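A conversation model defines what users can say to your Actions and how your Actions respond to users. The main building blocks of a conversation model are intents, types, scenes, and prompts. After one of your Actions is invoked, Google Assistant hands the user off to that Action, and the Action begins a conversation with the user based on your conversation model, which consists of: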

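- Valid user requests - To define what users can say to your Actions, you create a collection of intents that augment the Assistant NLU so that it can understand requests specific to your Actions. Each intent defines training phrases that describe what users can say to match that intent. The Assistant NLU expands these training phrases to include similar phrases, and the aggregation of those phrases forms the intent's language model.

- Action logic and responses - Scenes process intents, carry out the required logic, and generate prompts to return to the user.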

Define valid user requests

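To define what users can say to your Actions, you use a combination of intents and types. User intents and types let you augment the Assistant NLU with your own language models. System intents and types let you take advantage of built-in language models and event detection, such as users wanting to quit your Action or Assistant detecting no input at all.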

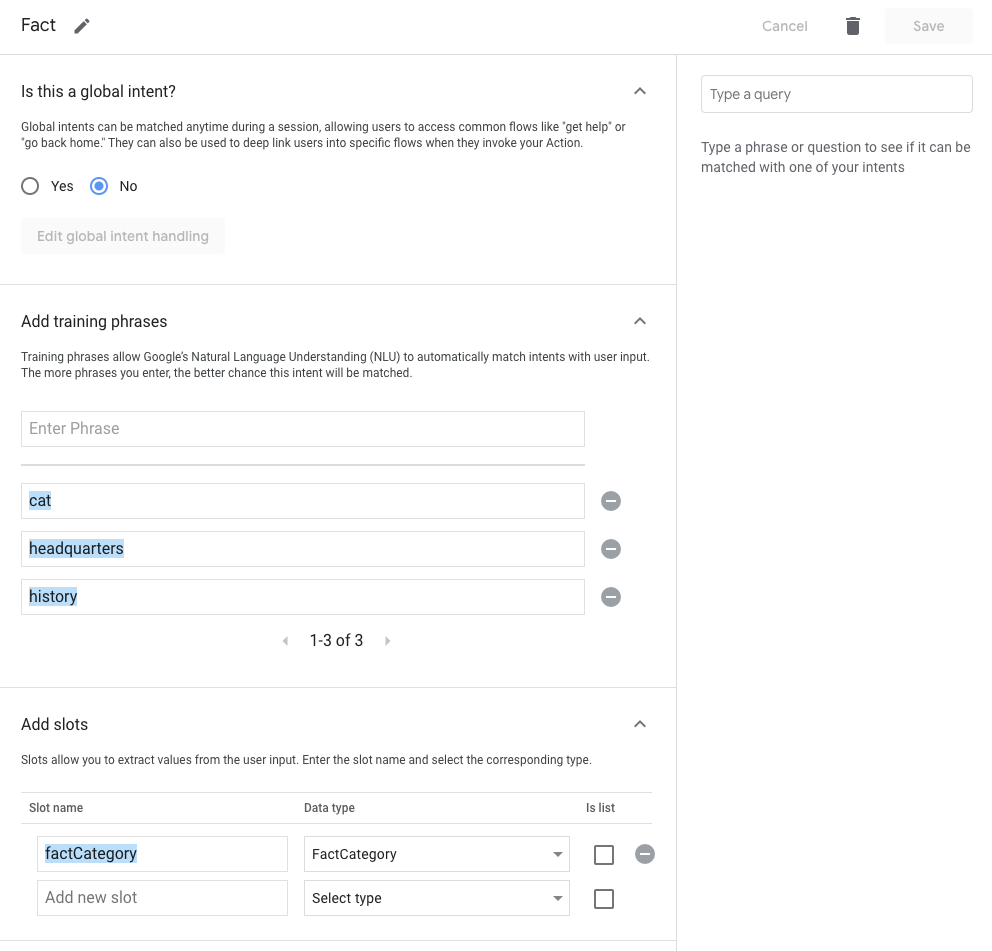

Create user intents

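User intents let you define your own training phrases that describe what users might say to your Actions. The Assistant NLU uses these phrases to train itself to understand what your users say. When users say something that matches a user intent's language model, Assistant matches the intent and notifies your Action so that it can carry out logic and respond to users.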
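As a rough illustration of what matching an intent's language model means, here is a toy Python sketch. It is not how the Assistant NLU works: the real NLU is a trained model that generalizes well beyond the literal training phrases. The sketch simply scores an utterance by word overlap (Jaccard similarity) with each intent's training phrases and returns the best-scoring intent, or None below a cut-off. The intent names, phrases, and threshold are all hypothetical.

```python
from __future__ import annotations

import re

# Hypothetical user intents, each with a few training phrases.
TRAINING_PHRASES: dict[str, list[str]] = {
    "order_drink": ["I want to order a coffee", "get me a latte", "order a drink"],
    "check_hours": ["when are you open", "what are your opening hours"],
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return the set of words in it."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity of two word sets (0.0 when both are empty)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def match_intent(utterance: str, threshold: float = 0.4) -> str | None:
    """Return the intent whose best training phrase overlaps the utterance most.

    Returns None when even the best score is below the threshold (no match).
    """
    words = tokenize(utterance)
    best_intent, best_score = None, 0.0
    for intent, phrases in TRAINING_PHRASES.items():
        score = max(jaccard(words, tokenize(p)) for p in phrases)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else None
```

For example, "I'd like to order a coffee please" scores highest against "I want to order a coffee" and resolves to order_drink, even though it is not one of the listed phrases.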


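To create a user intent:

- In the Develop tab, click User intents > ⊕ (New intent), specify a name, and press Enter to create the intent.

- Click the newly created intent in the left menu. The Intent editor appears.

- Add training phrases to the intent. Add as many training phrases as you can to train the Assistant NLU.

- Optional: Annotate training phrases to instruct the Assistant NLU to parse and extract typed parameters that match a specified type from user input:

  - Enter a name for the parameter in the Add new parameter field.

  - Select a system type from the drop-down menu, or create a custom type.

  - Specify whether the parameter is a list. This lets the parameter collect multiple values of the same type.

  - In the Add training phrases section, highlight the text you want to apply the type to. This tells the Assistant NLU to treat the highlighted text as a parameter. If users say something that matches the type, the NLU extracts that value as the parameter.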

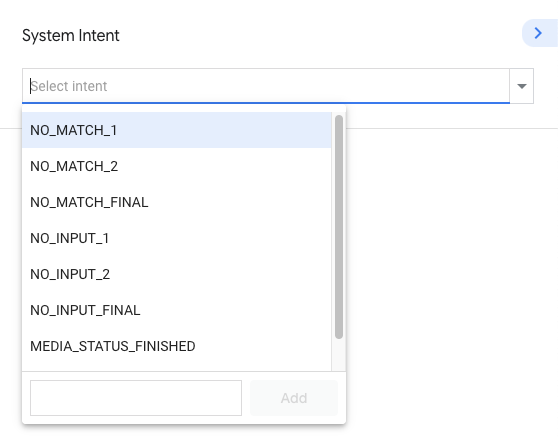
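Annotating a training phrase effectively marks a span of it as the place where a typed value appears. The toy sketch below models that idea under stated assumptions: highlighted spans are written with a made-up $name notation, each type is a hand-written list of values, and only the literal phrase shape is matched (the Assistant NLU generalizes to similar phrasings). List parameters are not modeled.

```python
from __future__ import annotations

import re

# Hypothetical types: each type name maps to the values it accepts.
TYPES: dict[str, list[str]] = {
    "size": ["small", "medium", "large"],
    "drink": ["coffee", "tea", "latte"],
}

# Hypothetical annotated training phrase: "$size" and "$drink" mark highlighted spans.
PHRASE = "order a $size $drink"
# Parameter name -> type name, as chosen in the intent editor.
PARAMS: dict[str, str] = {"size": "size", "drink": "drink"}


def phrase_to_regex(phrase: str, params: dict[str, str]) -> re.Pattern[str]:
    """Compile the phrase into a regex with one named group per parameter."""
    parts = []
    for token in phrase.split():
        if token.startswith("$"):
            name = token[1:]
            values = "|".join(re.escape(v) for v in TYPES[params[name]])
            parts.append(f"(?P<{name}>{values})")
        else:
            parts.append(re.escape(token))
    return re.compile(r"\s+".join(parts), re.IGNORECASE)


def extract_parameters(
    utterance: str, phrase: str, params: dict[str, str]
) -> dict[str, str] | None:
    """Return {parameter: value} if the utterance fits the phrase, else None."""
    match = phrase_to_regex(phrase, params).fullmatch(utterance.strip())
    if match is None:
        return None
    return {name: value.lower() for name, value in match.groupdict().items()}
```

With these definitions, "Order a LARGE latte" yields size = large and drink = latte, while "order a huge latte" returns None because "huge" is not a value of the size type.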

Create system intents

System intents let you use intents with predefined language models for common events, such as users wanting to quit your Action or user input timing out. To create system intents:

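- In the Develop tab, click System intents. A set of system intents is available, such as NO_MATCH, NO_INPUT, and CANCEL.

- Each system intent contains its own handlers, which you can customize for each type of system intent. For example, a system intent can trigger a webhook event and send static prompts when the event occurs.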
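The per-intent handlers can be pictured as a small lookup table. The sketch below is a hypothetical model, not the Actions Builder configuration format: each system intent name maps to an optional webhook handler name and an optional static prompt, and the dispatcher returns the actions to perform in order. The handler name save_progress and all prompt texts are invented for the example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Handler:
    """What to do when a system intent fires (illustrative fields only)."""
    webhook_handler: str | None = None  # webhook event to trigger, if any
    static_prompt: str | None = None    # static prompt to send, if any


# Hypothetical handler configuration for three built-in system intents.
SYSTEM_INTENT_HANDLERS: dict[str, Handler] = {
    "NO_MATCH": Handler(static_prompt="Sorry, I didn't catch that."),
    "NO_INPUT": Handler(static_prompt="Are you still there?"),
    "CANCEL": Handler(webhook_handler="save_progress", static_prompt="Goodbye!"),
}


def handle_system_intent(intent: str) -> list[tuple[str, str]]:
    """Return the (kind, value) actions configured for a system intent."""
    handler = SYSTEM_INTENT_HANDLERS.get(intent)
    if handler is None:
        raise KeyError(f"no handler configured for {intent}")
    actions: list[tuple[str, str]] = []
    if handler.webhook_handler:
        actions.append(("webhook", handler.webhook_handler))
    if handler.static_prompt:
        actions.append(("prompt", handler.static_prompt))
    return actions
```

Calling handle_system_intent("CANCEL") returns the webhook action first and then the goodbye prompt, mirroring the "trigger a webhook and send a static prompt" example above.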

Create custom types

Custom types let you create your own type specification to train the NLU to understand a set of values that should map to a single key.


To create a custom type:

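- In the Develop tab, click Types > ⊕ (New type).

- In the What kind of values will this type support? section, select how to provide type values:

  - Words and synonyms let you map multiple values to a single key; each such mapping is called an entry. Your type can contain one or more entries. If you choose this option, you can also enable the following NLU settings:

    - Enable fuzzy matching - Lets entries with multiple words match even when the words are spoken in a different order.

    - Accept unknown values - When you can't specify all possible values, accepts unknown words or phrases based on the surrounding input and the intent training data, such as items that might be added to a grocery list.

  - Regular expressions let the type match regular expression patterns based on Google's RE2 standard.

  - Free form text lets the type match anything the user says.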


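- Specify the type values in the Add entries section. If you selected Free form text, your type matches any text, so you don't need to provide any entries.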
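To make the type options concrete, here is a toy resolver covering three of them. Assumptions: the entries and synonyms are invented, fuzzy matching is approximated as "same words in any order" (the console's actual behavior may differ), Python's re module stands in for RE2 using a pattern that behaves the same in both engines, and Accept unknown values is not modeled.

```python
from __future__ import annotations

import re

# Hypothetical "words and synonyms" type: each key (entry) maps to its synonyms.
DRINK_ENTRIES: dict[str, list[str]] = {
    "cold_brew": ["cold brew", "cold brew coffee", "iced coffee"],
    "hot_chocolate": ["hot chocolate", "cocoa"],
}


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace."""
    return " ".join(text.lower().split())


def resolve_entry(
    value: str, entries: dict[str, list[str]], fuzzy: bool = False
) -> str | None:
    """Map a spoken value to its entry key, or None if no synonym matches.

    With fuzzy=True a synonym also matches when the same words are spoken
    in a different order (a rough stand-in for fuzzy matching).
    """
    spoken = normalize(value)
    for key, synonyms in entries.items():
        for synonym in synonyms:
            syn = normalize(synonym)
            if spoken == syn:
                return key
            if fuzzy and sorted(spoken.split()) == sorted(syn.split()):
                return key
    return None


# Regular-expression type: a hypothetical order ID such as "AB1234".
# [A-Z] and [0-9] classes behave identically in Python re and RE2.
ORDER_ID = re.compile(r"[A-Z]{2}[0-9]{4}")


def match_regex_type(value: str) -> str | None:
    """Accept the value only if the whole string matches the pattern."""
    return value if ORDER_ID.fullmatch(value) else None


def match_free_form(value: str) -> str:
    """Free form text type: any input is accepted as-is."""
    return value
```

Here "Cocoa" resolves to the hot_chocolate key, and "brew cold" resolves to cold_brew only when fuzzy matching is enabled.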

Build Action logic and responses

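The Assistant NLU matches user requests to intents so that your Action can process them in scenes. Scenes are powerful logic executors that let you process events during a conversation.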


Create a scene

The following sections describe how to create scenes and define functionality for each stage of a scene's lifecycle.

To create a scene:

- In the Develop tab, click Scenes > ⊕ (New scene), specify a name, and press Enter to create the scene.

- Click the newly created scene in the left menu. The Scene editor appears.

Define one-time setup

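When a scene first becomes active, you can carry out one-time tasks in the On enter stage. The On enter stage executes only once, and it is the only stage that does not run inside the scene's execution loop.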

Within a scene, click the On enter stage to specify its functionality. You can specify the following functionality in this stage:


Figure 4. Example of a scene's On enter stage
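The difference between On enter and the rest of a scene can be modeled as "run once, then loop". In the toy sketch below, the Scene class, its run_turn method, and the session dictionary are hypothetical stand-ins, not Actions Builder APIs: run_turn calls the On enter callback only the first time it runs and the loop callback on every call.

```python
from __future__ import annotations

from typing import Callable


class Scene:
    """Toy scene: on_enter runs once; loop_stage runs on every iteration."""

    def __init__(
        self,
        on_enter: Callable[[dict], None],
        loop_stage: Callable[[dict], None],
    ) -> None:
        self.on_enter = on_enter
        self.loop_stage = loop_stage
        self.entered = False

    def run_turn(self, session: dict) -> None:
        if not self.entered:
            self.on_enter(session)  # one-time setup, outside the loop
            self.entered = True
        self.loop_stage(session)    # stands in for conditions, slot filling, input handling


def welcome(session: dict) -> None:
    """Hypothetical On enter task: queue a welcome prompt."""
    session["prompts"].append("Welcome! What would you like to order?")


def count_turn(session: dict) -> None:
    """Hypothetical per-iteration task: count loop iterations."""
    session["turns"] += 1


session = {"prompts": [], "turns": 0}
scene = Scene(on_enter=welcome, loop_stage=count_turn)
for _ in range(3):
    scene.run_turn(session)
```

After three calls the welcome prompt has been queued exactly once, while the loop stage has run three times.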

Check conditions

Conditions let you check slot filling, session storage, user storage, and home storage parameters to control the scene's execution flow.

Within a scene, click the + icon for the Condition stage. The Condition editor appears on the right. You can specify the following functionality:

- Condition - Specifies the actual conditional statement that the logic is based on. See the conditions documentation for syntax information.
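Conceptually, a condition is a boolean expression over values from the storage scopes listed above. The sketch below models those scopes as nested dictionaries read through dotted paths. The path names, the "FINAL" slot-filling status value, and the step names returned are assumptions for illustration; see the conditions documentation for the actual expression syntax.

```python
from __future__ import annotations

from typing import Any

# Hypothetical snapshot of the values a condition can read, grouped by scope.
STATE: dict[str, Any] = {
    "scene": {"slots": {"status": "FINAL"}},
    "session": {"params": {"size": "large"}},
    "user": {"params": {"visits": 3}},
    "home": {"params": {}},
}


def lookup(state: dict[str, Any], path: str) -> Any:
    """Follow a dotted path such as 'user.params.visits'; None if missing."""
    node: Any = state
    for part in path.split("."):
        if not isinstance(node, dict) or part not in node:
            return None
        node = node[part]
    return node


def next_step(state: dict[str, Any]) -> str:
    """Branch the flow: move on once slot filling has completed."""
    if lookup(state, "scene.slots.status") == "FINAL":
        return "confirm_order"
    return "keep_collecting"


def is_returning_user(state: dict[str, Any]) -> bool:
    """True when the user-storage visit counter shows more than one visit."""
    visits = lookup(state, "user.params.visits")
    return isinstance(visits, int) and visits > 1
```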

Define slot filling

Slots let you extract typed parameters from user input.

In the scene editor, click the + icon for the Slot filling stage. The slot editor appears on the right. You can specify the following properties of a slot:

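- Slot name: Specifies the name of the slot. If you want to take advantage of slot value mapping, use the same name as the corresponding intent parameter.

- Slot type: Specifies the slot's type, either a system type or a custom type.

- This slot is required: Marks this slot as required. If enabled, slot filling doesn't complete until this slot is filled.

- Assign default value to this slot: Specifies a default value for the slot, read from the specified session parameter.

- Customize slot value writeback: Specifies a session parameter that persists the slot's value after slot filling completes.

- Slot validation: Triggers a webhook when a slot is filled. This setting applies to all slots.

- Call your webhook (enabled when the slot is required): Triggers a webhook. See the webhooks documentation for more information about webhooks.

- Send prompts (enabled when the slot is required): Specifies static prompts to send to users so they know how to continue the conversation. See the prompts documentation for more information about specifying prompts.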
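The interaction between required slots, default values, and writeback can be sketched as a single slot-filling pass. This is a simplified, hypothetical model: the Slot fields mirror the editor options listed above, the "COLLECTING" and "FINAL" status strings are illustrative, and slot validation and webhooks are left out.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Slot:
    name: str
    required: bool = True
    default_from: str | None = None   # session parameter that supplies a default
    write_back_to: str | None = None  # session parameter that keeps the final value
    prompt: str = ""                  # static prompt sent while the slot is empty


def fill_slots(
    slots: list[Slot], values: dict[str, str], session: dict[str, str]
) -> tuple[str, str | None, dict[str, str]]:
    """Run one toy slot-filling pass; returns (status, prompt, filled values)."""
    filled = dict(values)
    # Apply defaults read from session parameters to slots the user left empty.
    for slot in slots:
        if slot.name not in filled and slot.default_from and slot.default_from in session:
            filled[slot.name] = session[slot.default_from]
    # Filling cannot complete while a required slot is empty: prompt for it.
    for slot in slots:
        if slot.required and slot.name not in filled:
            return "COLLECTING", slot.prompt, filled
    # Filling is complete: write values back to their configured session parameters.
    for slot in slots:
        if slot.write_back_to and slot.name in filled:
            session[slot.write_back_to] = filled[slot.name]
    return "FINAL", None, filled


# Hypothetical slots for a drink-ordering scene.
SLOTS = [
    Slot("size", default_from="preferred_size", prompt="What size would you like?"),
    Slot("drink", write_back_to="last_drink", prompt="What would you like to drink?"),
    Slot("flavor", required=False),
]
```

With session = {"preferred_size": "medium"}, a first pass with no user values fills size from the default and prompts for drink; a second pass with drink = "tea" completes and writes "tea" to last_drink, while the optional flavor slot never blocks completion.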

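For certain slot types (such as those related to transactions or user engagement), an additional section appears where you can configure the slot. Slot configurations can change the user's conversational experience based on the properties you provide.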

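To configure a slot, provide properties in a JSON object, either from your fulfillment (referenced as a session parameter) or in the inline JSON editor. You can find the available properties for each slot type in the Actions Builder JSON reference. For example, the actions.type.DeliveryAddressValue slot type corresponds to the reference content for the DeliveryAddressValue slot.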

Slot value mapping

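In many cases, a previous intent match can include parameters that partially or entirely fill the corresponding scene's slot values. In these cases, all slots filled by intent parameters map to the scene's slot filling if the slot name matches the intent parameter name.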

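For example, if a user matches an intent to order a beverage by saying "I want to order a large vanilla coffee", the existing slots for size, flavor, and beverage type are considered filled in the corresponding scene, provided that scene defines the same slots.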
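Slot value mapping amounts to a name-based join between the matched intent's parameters and the scene's slots. In this hypothetical sketch, the parameter and slot names (including the extra milk slot) are invented for the coffee example above.

```python
from __future__ import annotations


def map_intent_params_to_slots(
    intent_params: dict[str, str], scene_slots: list[str]
) -> dict[str, str]:
    """Prefill scene slots whose names match a parameter of the matched intent."""
    return {name: intent_params[name] for name in scene_slots if name in intent_params}


# Hypothetical parameters extracted from "I want to order a large vanilla coffee".
params = {"size": "large", "flavor": "vanilla", "beverage_type": "coffee"}
# Hypothetical slots defined by the ordering scene; "milk" has no matching parameter.
slots = ["size", "flavor", "beverage_type", "milk"]

prefilled = map_intent_params_to_slots(params, slots)
still_empty = [name for name in slots if name not in prefilled]
```

Here size, flavor, and beverage_type arrive already filled, so slot filling only needs to ask about milk.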

Process input

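During this stage, you can have the Assistant NLU match user input to intents. You can scope intent matching to a specific scene by adding the desired intents to that scene. This lets you control conversation flow by telling Assistant to match specific intents only when specific scenes are active.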
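Scoping intents to scenes means the same utterance can be handled differently depending on which scene is active. In the toy model below, each hypothetical scene lists the intents it handles and the scene to transition to; ranked_intents stands in for the NLU's candidate intents in order of confidence, which is an assumption for the sketch. When no scoped intent matches, the function returns None, which is roughly the situation the NO_MATCH system intent covers.

```python
from __future__ import annotations

# Hypothetical scenes: intent handled in that scene -> scene to transition to.
SCENES: dict[str, dict[str, str]] = {
    "OrderDrink": {"order_drink": "Checkout", "cancel_order": "Goodbye"},
    "Checkout": {"confirm": "Goodbye", "cancel_order": "Goodbye"},
}


def handle_input(
    active_scene: str, ranked_intents: list[str]
) -> tuple[str | None, str]:
    """Return (matched intent, next scene) using only the active scene's intents.

    Intents not added to the active scene are ignored even if the NLU ranks
    them first; with no scoped match the scene stays the same.
    """
    handlers = SCENES.get(active_scene, {})
    for intent in ranked_intents:
        if intent in handlers:
            return intent, handlers[intent]
    return None, active_scene
```

For instance, in the Checkout scene the candidates ["order_drink", "confirm"] resolve to confirm, because order_drink is not scoped to Checkout.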

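Within a scene, click the + icon for the User intent handling or System intent handling stage. The intent handler editor appears on the right. You can specify the following functionality of the intent handler: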