Choose a natural language processing function

This document provides a comparison of the natural language processing functions available in BigQuery ML, which are ML.GENERATE_TEXT, ML.TRANSLATE, and ML.UNDERSTAND_TEXT.

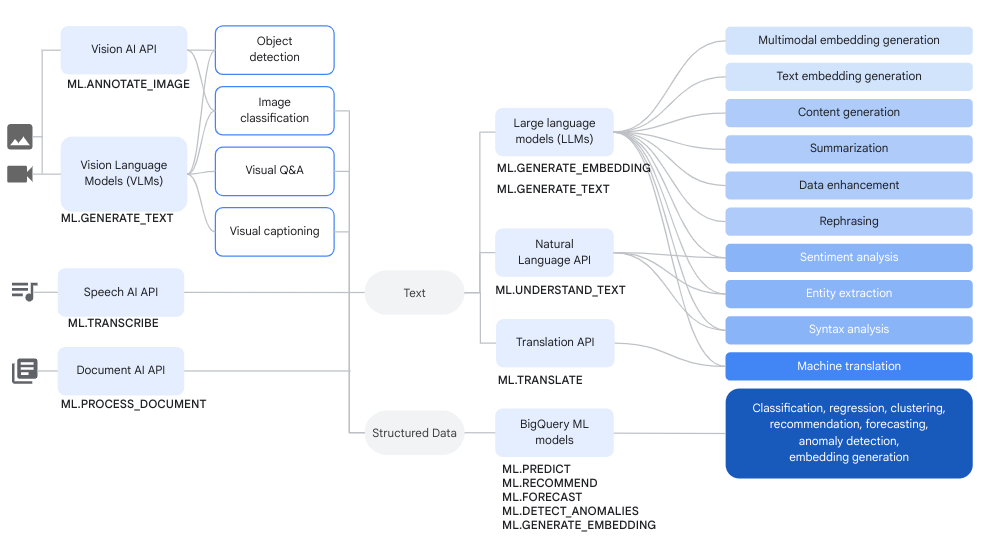

ML.GENERATE_TEXT can do tasks that the other two functions can do as well, as

shown in the following image:

You can use the information in this document to help you decide which function to use in cases where the functions have overlapping capabilities.

At a high level, the difference between these functions is as follows:

ML.GENERATE_TEXT is a good choice for performing customized natural language processing (NLP) tasks at a lower cost. This function offers more language support, faster throughput, and model tuning capability, and also works with multimodal models.

ML.TRANSLATE is a good choice for performing translation-specific NLP tasks where you need to support a high rate of queries per minute.

ML.UNDERSTAND_TEXT is a good choice for performing NLP tasks supported by the Cloud Natural Language API.

Supported models

Supported models are as follows:

ML.GENERATE_TEXT: you can use a subset of the Vertex AI Gemini or PaLM models to generate text. For more information on supported models, see the ML.GENERATE_TEXT syntax.

ML.TRANSLATE: you use the default model of the Cloud Translation API.

ML.UNDERSTAND_TEXT: you use the default model of the Cloud Natural Language API.

Supported tasks

Supported tasks are as follows:

ML.GENERATE_TEXT: you can perform any NLP task. The task that the model performs depends on the prompt you specify. For example, to perform a question answering task, you could provide a prompt similar to CONCAT("What are the key concepts in the following article?: ", article_text). You can also provide context as part of the prompt, for example CONCAT("context: Only output 'yes' or 'no' to the following question: ", question).

ML.TRANSLATE: you can perform the following tasks: TRANSLATE_TEXT and DETECT_LANGUAGE.

ML.UNDERSTAND_TEXT: you can perform the following tasks: EXTRACT_ENTITIES, ANALYZE_SYNTAX, CLASSIFY_TEXT, ANALYZE_ENTITY_SENTIMENT, and ANALYZE_SENTIMENT.
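In BigQuery the CONCAT prompts above are built in SQL. To illustrate what those expressions produce, the following Python sketch assembles the same prompt strings row by row. It is a non-authoritative illustration, not BigQuery ML code: the helper names and sample text are hypothetical, and the "prompt" key is an assumed stand-in for the column of prompt text that would be passed to ML.GENERATE_TEXT.

```python
from typing import Iterable


def key_concepts_prompt(article_text: str) -> str:
    # Same string that CONCAT("What are the key concepts in the following article?: ", article_text) builds.
    return "What are the key concepts in the following article?: " + article_text


def yes_no_prompt(question: str) -> str:
    # Same string that the context-carrying CONCAT example builds.
    return "context: Only output 'yes' or 'no' to the following question: " + question


def build_prompt_rows(articles: Iterable[str]) -> list[dict[str, str]]:
    # One dict per input row; the hypothetical "prompt" key stands in for the
    # column of prompt text that would be sent to ML.GENERATE_TEXT.
    return [{"prompt": key_concepts_prompt(text)} for text in articles]


if __name__ == "__main__":
    for row in build_prompt_rows(["BigQuery ML lets you create models by using SQL."]):
        print(row["prompt"])
    print(yes_no_prompt("Does the article mention pricing?"))
```

Changing only the fixed prefix changes the task, which is why a single function can cover question answering, classification, and other NLP tasks.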

Data context

When choosing what function to use, consider whether your data can be analyzed in isolation, or whether it requires additional context to support the analysis.

If your data requires additional context, using ML.GENERATE_TEXT with a Vertex AI model is a better choice, as those models allow you to provide context as part of the prompt you submit. Keep in mind that providing additional context as input increases token count and cost.

If your data can be analyzed without the model considering other context (for example, translating a string of text without knowing why it was written), then ML.TRANSLATE or ML.UNDERSTAND_TEXT might be a better choice, provided that the task you want to perform is supported.
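To make the cost trade-off of added context concrete, the following Python sketch compares the input characters billed for a prompt with and without a context prefix. It is a rough sketch that assumes character-based billing at the Gemini 1.0 rate quoted in the Pricing section ($0.000375 per 1,000 characters) and counts input characters only; the sample question and table size are hypothetical.

```python
from decimal import Decimal

# Assumed billing rate: USD per 1,000 input characters, using the Gemini 1.0
# figure from the Pricing section. Output characters are not counted here.
RATE_PER_1000_CHARS = Decimal("0.000375")


def input_cost(prompt: str) -> Decimal:
    # (characters / 1,000) * rate, computed with Decimal to avoid float rounding.
    return Decimal(len(prompt)) / 1000 * RATE_PER_1000_CHARS


# Hypothetical row: the same question sent with and without a context prefix.
question = "Was the order delivered on time?"
context = "context: Only output 'yes' or 'no' to the following question: "

without_context = input_cost(question)
with_context = input_cost(context + question)
rows = 1_000_000  # hypothetical table size

print(f"{len(context)} extra characters per row")
print(f"about ${(with_context - without_context) * rows} extra for {rows:,} rows")
```

The extra cost scales linearly with both the length of the context and the number of rows, so a long context repeated on every row is where the difference becomes noticeable.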

Output structure

ML.GENERATE_TEXT consistently returns results in the ml_generate_text_llm_result output column. You can also use your prompt to define your output structure. For example, you can instruct the model to return your result as JSON with custom parent and child fields, and provide an example of how to produce this result.
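One way to act on this is sketched below in Python: the prompt asks for JSON with a parent field and child fields and includes an example, and the caller then parses the string returned in the ml_generate_text_llm_result column. The field names (review, sentiment, topics), the fence-stripping step, and the validation rules are hypothetical choices, not part of BigQuery ML. Because a model can ignore format instructions, the parser raises an error instead of trusting the output.

```python
import json

# Hypothetical target structure: a parent object with nested child fields.
EXAMPLE_OUTPUT = {"review": {"sentiment": "positive", "topics": ["price", "delivery"]}}

FENCE = "`" * 3  # models sometimes wrap JSON in a Markdown code fence


def build_json_prompt(review_text: str) -> str:
    # Ask for JSON only, and show an example of the expected shape.
    return (
        "Classify the following product review. Return only JSON with a parent "
        "field 'review' containing the child fields 'sentiment' and 'topics'. "
        "Example: " + json.dumps(EXAMPLE_OUTPUT) + " Review: " + review_text
    )


def parse_llm_result(text: str) -> dict:
    # Parse the string found in ml_generate_text_llm_result and check its shape.
    cleaned = text.strip()
    if cleaned.startswith(FENCE):
        cleaned = cleaned.strip("`").removeprefix("json").strip()
    data = json.loads(cleaned)  # raises json.JSONDecodeError on malformed output
    review = data.get("review") if isinstance(data, dict) else None
    if not (
        isinstance(review, dict)
        and isinstance(review.get("sentiment"), str)
        and isinstance(review.get("topics"), list)
    ):
        raise ValueError("model output does not match the requested structure")
    return data
```

Rows whose output fails validation can be logged and re-sent rather than silently dropped.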

ML.TRANSLATE and ML.UNDERSTAND_TEXT produce the same output for a given task type for each successful call to the API. The output from these functions also includes metadata about their results. For example, ML.TRANSLATE output includes information about the input language, and ML.UNDERSTAND_TEXT output includes information about the magnitude of the sentiment for sentiment analysis tasks. Generating this metadata is possible with the Vertex AI models, but it requires significant prompt engineering and isn't likely to provide the same granularity.

Pricing

Pricing is as follows:

ML.GENERATE_TEXT: $0.00025 per 1,000 characters for PaLM 2 models, and $0.000375 per 1,000 characters for Gemini 1.0 models. Supervised tuning of supported models is charged per node hour at Vertex AI custom training pricing. For more information, see Vertex AI pricing.

ML.TRANSLATE: $0.02 per 1,000 characters. For more information, see Cloud Translation API pricing.

ML.UNDERSTAND_TEXT: starts at $0.001 per 1,000 characters, depending on the task type and your usage. For more information, see Cloud Natural Language API pricing.
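The per-character rates above can be turned into a rough estimate. This Python sketch multiplies a character count by each quoted rate. It assumes simple linear pricing and ignores tuning charges, free tiers, and the usage-dependent tiers of the Cloud Natural Language API, so treat the results as approximations and check the pricing pages for current figures.

```python
from decimal import Decimal

# USD per 1,000 characters, as quoted in this section. The ML.UNDERSTAND_TEXT
# figure is only a starting price; real rates depend on task type and usage.
RATES_PER_1000_CHARS = {
    "ML.GENERATE_TEXT (PaLM 2)": Decimal("0.00025"),
    "ML.GENERATE_TEXT (Gemini 1.0)": Decimal("0.000375"),
    "ML.TRANSLATE": Decimal("0.02"),
    "ML.UNDERSTAND_TEXT (starting price)": Decimal("0.001"),
}


def estimate_cost(num_chars: int, rate_per_1000: Decimal) -> Decimal:
    # Linear estimate: (characters / 1,000) * rate.
    return Decimal(num_chars) / 1000 * rate_per_1000


if __name__ == "__main__":
    chars = 10_000_000  # hypothetical workload: 10 million characters
    for name, rate in RATES_PER_1000_CHARS.items():
        print(f"{name}: ${estimate_cost(chars, rate)}")
```

For the hypothetical 10 million characters, the estimates work out to $2.50 (PaLM 2), $3.75 (Gemini 1.0), $200 (ML.TRANSLATE), and at least $10 (ML.UNDERSTAND_TEXT).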

Model training

Custom training support is as follows:

ML.GENERATE_TEXT: supervised tuning is supported for some models.

ML.TRANSLATE: custom training isn't supported.

ML.UNDERSTAND_TEXT: custom training isn't supported.

Multimodality

Multimodality support is as follows:

ML.GENERATE_TEXT: supports text and text + image input.

ML.TRANSLATE: supports text input.

ML.UNDERSTAND_TEXT: supports text input.

Queries per minute (QPM) limit

QPM limits are as follows:

ML.GENERATE_TEXT: ranges from 100 to 1,600 QPM in the default us-central1 region, depending on the model used.

ML.TRANSLATE: 6,000 QPM limit for v3 of the Cloud Translation API.

ML.UNDERSTAND_TEXT: 600 QPM limit.
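QPM limits put a floor on how long a large batch takes. The following Python sketch computes that lower bound with integer ceiling division. It assumes each input row becomes one request and ignores retries and quota shared with other jobs; the dictionary keys and the row count are hypothetical.

```python
# QPM limits quoted in this section. For ML.GENERATE_TEXT, both ends of the
# 100 to 1,600 range are listed because the real value depends on the model.
QPM_LIMITS = {
    "ML.GENERATE_TEXT (low end)": 100,
    "ML.GENERATE_TEXT (high end)": 1_600,
    "ML.TRANSLATE": 6_000,
    "ML.UNDERSTAND_TEXT": 600,
}


def min_minutes(rows: int, qpm: int) -> int:
    # Lower bound on wall-clock minutes, assuming one request per row.
    # -(-a // b) is ceiling division on integers, avoiding float rounding.
    return -(-rows // qpm)


if __name__ == "__main__":
    rows = 120_000  # hypothetical table size
    for name, qpm in QPM_LIMITS.items():
        print(f"{name}: at least {min_minutes(rows, qpm)} minutes")
```

For example, 120,000 rows need at least 1,200 minutes at 100 QPM, 75 minutes at 1,600 QPM, 20 minutes with ML.TRANSLATE, and 200 minutes with ML.UNDERSTAND_TEXT.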

Token limit

Token limits are as follows:

ML.GENERATE_TEXT: ranges from 8,192 to 24,576 tokens, depending on the model used.

ML.TRANSLATE: no token limit. However, this function does have a 30,000-byte limit.

ML.UNDERSTAND_TEXT: 100,000 token limit.
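Because the ML.TRANSLATE limit is expressed in bytes rather than characters, text in scripts that need several bytes per character reaches it sooner. The following Python sketch measures UTF-8 size and splits a long string into pieces that each stay within 30,000 bytes without cutting a character in half. It assumes the limit applies to the UTF-8 encoding of each input string; the helper names are hypothetical, and splitting mid-sentence can hurt translation quality, so a real pipeline would more likely break on sentence or paragraph boundaries.

```python
MAX_BYTES = 30_000  # limit quoted above; assumed to mean UTF-8 bytes per input string


def utf8_len(text: str) -> int:
    # Size of the text once encoded as UTF-8.
    return len(text.encode("utf-8"))


def split_by_bytes(text: str, max_bytes: int = MAX_BYTES) -> list[str]:
    # Greedily pack whole characters into chunks whose UTF-8 size stays within max_bytes.
    chunks: list[str] = []
    current: list[str] = []
    size = 0
    for ch in text:
        ch_bytes = len(ch.encode("utf-8"))
        if size + ch_bytes > max_bytes and current:
            chunks.append("".join(current))
            current, size = [], 0
        current.append(ch)
        size += ch_bytes
    if current:
        chunks.append("".join(current))
    return chunks


if __name__ == "__main__":
    text = "\u00e9" * 20_000  # 20,000 characters, 40,000 UTF-8 bytes
    print([utf8_len(part) for part in split_by_bytes(text)])
```

Checking utf8_len before sending a row is enough to flag inputs that exceed the limit; splitting is only needed when long inputs are expected.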

Supported languages

Supported languages are as follows:

ML.GENERATE_TEXT: supports Generative AI for Vertex AI languages.

ML.TRANSLATE: supports Cloud Translation API languages.

ML.UNDERSTAND_TEXT: supports Cloud Natural Language API languages.

Region availability

Region availability is as follows:

ML.GENERATE_TEXT: available in all Generative AI for Vertex AI regions.

ML.TRANSLATE: available in the EU and US multi-regions.

ML.UNDERSTAND_TEXT: available in the EU and US multi-regions.