This page offers a series of multiple-choice exercises on the material covered in the "Decision trees" unit, for you to test yourself.

Question 1

Inference in a decision tree runs by routing an example...

from the leaf to the root.

All inference starts from the root (the first condition).

from one leaf to another.

All inference starts from the root, not from a leaf.

from the root to a leaf.

Correct!
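To make the root-to-leaf routing concrete, here is a minimal sketch of decision tree inference, assuming a hypothetical binary tree where each internal node holds one condition and each leaf holds one prediction. The class names `Node` and `Leaf`, the function `predict`, and the example tree's features, thresholds, and labels are all illustrative and are not the trees from Question 3.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Leaf:
    value: str  # the prediction stored at this leaf (hypothetical labels)


@dataclass
class Node:
    condition: Callable[[dict], bool]  # the test applied to an example
    yes: "Tree"  # subtree followed when the condition is true
    no: "Tree"   # subtree followed when the condition is false


Tree = Union[Leaf, Node]


def predict(tree: Tree, example: dict) -> str:
    """Route an example from the root down to exactly one leaf."""
    node = tree
    # Each step evaluates one condition and descends one level;
    # inference stops as soon as a leaf is reached.
    while isinstance(node, Node):
        node = node.yes if node.condition(example) else node.no
    return node.value


# A hypothetical two-level tree, used only for illustration.
tree = Node(
    condition=lambda ex: ex["x1"] >= 2.0,
    yes=Node(
        condition=lambda ex: ex["x2"] >= 0.3,
        yes=Leaf("orange"),
        no=Leaf("blue"),
    ),
    no=Leaf("blue"),
)

print(predict(tree, {"x1": 2.5, "x2": 0.4}))  # orange
```

Note that the loop never moves back up the tree or sideways between leaves: every prediction is a single path that begins at the root.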

Question 2

Do all conditions involve only a single feature?

Yes.

Oblique conditions test multiple features.

No.

Correct! Although axis-aligned conditions involve only a single feature, oblique conditions involve multiple features.
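The difference between the two kinds of conditions can be sketched as follows, assuming an oblique condition of the common linear form $\sum_i w_i x_i \ge t$. The function names and the sample weights and thresholds are hypothetical, chosen only to show how many features each condition reads.

```python
from typing import Dict


def axis_aligned(example: Dict[str, float], feature: str, threshold: float) -> bool:
    # Reads exactly one feature: x_feature >= threshold.
    return example[feature] >= threshold


def oblique(example: Dict[str, float], weights: Dict[str, float], threshold: float) -> bool:
    # Reads several features at once through a weighted sum:
    # sum_i w_i * x_i >= threshold (a linear oblique condition, assumed form).
    return sum(w * example[f] for f, w in weights.items()) >= threshold


example = {"x1": 0.4, "x2": 0.8}
print(axis_aligned(example, "x1", 0.5))               # False: 0.4 < 0.5
print(oblique(example, {"x1": 1.0, "x2": 1.0}, 1.0))  # True: 0.4 + 0.8 >= 1.0
```

Geometrically, an axis-aligned condition splits the feature space with a line parallel to one axis, while an oblique condition can split it along a slanted line.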

Question 3

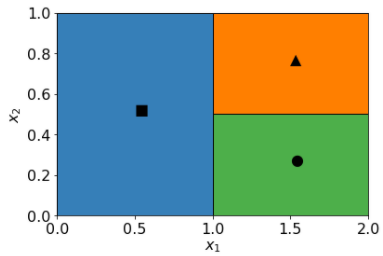

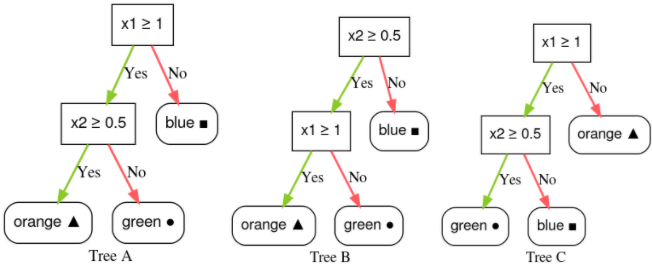

Consider the following prediction map over two features, x1 and x2:

Which of the following decision trees matches the prediction map?

Decision tree A.

Great!

Decision tree B.

If the condition x2 ≥ 0.5 is false, the leaf may or may not be blue, so this condition is wrong.

Decision tree C.

If x1 is not greater than or equal to 1.0, the leaf should be "blue" rather than "orange", so this leaf is wrong.