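Decision forest models are composed of decision trees. Decision forest learning algorithms (such as random forests) rely, at least in part, on the learning of decision trees.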

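In this section of the course, you will study a small example dataset and learn how a single decision tree is trained. In the next sections, you will learn how decision trees are combined to train decision forests.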

In YDF, individual decision tree models are trained with the CART learner:

```python
# https://ydf.readthedocs.io/en/latest/py_api/CartLearner
import ydf
model = ydf.CartLearner(label="my_label").train(dataset)
```

The model

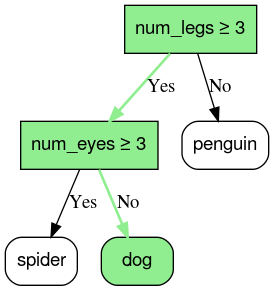

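A decision tree is a model composed of a collection of "questions" organized hierarchically in the shape of a tree. These questions are usually called conditions, splits, or tests. In this class, we use the term "condition". Each non-leaf node contains a condition, and each leaf node contains a prediction.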
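The structure described above can be sketched with two node types: a non-leaf node that holds a condition and two children, and a leaf that holds only a prediction. This is a minimal sketch, not YDF's internal representation. The class names `Leaf` and `Condition`, the helper `describe`, and the assumption that every condition has the form `feature >= threshold` are all hypothetical. The example tree reuses the two conditions and the `dog` leaf from the inference example in this section; its `spider` and `penguin` leaves are assumed for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    """Leaf node: holds a prediction and nothing else."""
    prediction: object


@dataclass
class Condition:
    """Non-leaf node: tests `feature >= threshold` and has two children."""
    feature: str
    threshold: float
    if_true: Node
    if_false: Node


Node = Union[Leaf, Condition]


def describe(node: Node, depth: int = 0, label: str = "") -> list[str]:
    """Render the tree root-first, one node per line, children indented."""
    pad = "  " * depth + label
    if isinstance(node, Leaf):
        return [f"{pad}predict {node.prediction!r}"]
    return (
        [f"{pad}{node.feature} >= {node.threshold}?"]
        + describe(node.if_true, depth + 1, "yes: ")
        + describe(node.if_false, depth + 1, "no: ")
    )


# The two conditions and the "dog" leaf follow the text;
# "spider" and "penguin" are hypothetical leaves for illustration.
tree = Condition(
    "num_legs", 3,
    if_true=Condition("num_eyes", 3, if_true=Leaf("spider"), if_false=Leaf("dog")),
    if_false=Leaf("penguin"),
)
print("\n".join(describe(tree)))
# num_legs >= 3?
#   yes: num_eyes >= 3?
#     yes: predict 'spider'
#     no: predict 'dog'
#   no: predict 'penguin'
```

Printing the root first and indenting each level mirrors the usual top-down way of drawing decision trees.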

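Botanical trees generally grow with the root at the bottom; decision trees, however, are usually drawn with the root (the first node) at the top.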

Figure 1. A simple classification decision tree. The green legend is not part of the decision tree.

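Inference with a decision tree model routes an example from the root node (at the top) down to one of the leaf nodes (at the bottom), following the conditions along the way. The value of the leaf that is reached is the decision tree's prediction. The set of visited nodes is called the inference path. For example, consider the following feature values: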

| num_legs | num_eyes |
|---|---|
| 4 | 2 |

The prediction would be dog. The inference path would be:

- num_legs ≥ 3 → Yes
- num_eyes ≥ 3 → No

Figure 2. The inference path that ends at the leaf *dog* for the example *{num_legs : 4, num_eyes : 2}*.
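The routing described above can be sketched as a loop that evaluates one condition per level, follows the matching branch, and stops at a leaf, recording each condition it evaluates. This is a minimal sketch under stated assumptions: `Leaf`, `Condition`, and `infer` are hypothetical names, every condition is assumed to have the form `feature >= threshold`, and the `spider` and `penguin` leaves are assumed for illustration. Only the two conditions and the `dog` leaf come from the text.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    prediction: object  # value returned when inference reaches this leaf


@dataclass
class Condition:
    feature: str        # tested as: example[feature] >= threshold
    threshold: float
    if_true: Node
    if_false: Node


Node = Union[Leaf, Condition]


def infer(root: Node, example: dict[str, float]) -> tuple[object, list[str]]:
    """Route `example` from the root to a leaf.

    Returns the leaf's prediction and the conditions evaluated on the way.
    """
    node, path = root, []
    while isinstance(node, Condition):
        satisfied = example[node.feature] >= node.threshold
        path.append(f"{node.feature} >= {node.threshold} -> {'yes' if satisfied else 'no'}")
        node = node.if_true if satisfied else node.if_false
    return node.prediction, path


# "spider" and "penguin" are hypothetical leaves; the conditions and "dog" follow the text.
tree = Condition(
    "num_legs", 3,
    if_true=Condition("num_eyes", 3, if_true=Leaf("spider"), if_false=Leaf("dog")),
    if_false=Leaf("penguin"),
)

prediction, path = infer(tree, {"num_legs": 4, "num_eyes": 2})
print(prediction)  # dog
print(path)        # ['num_legs >= 3 -> yes', 'num_eyes >= 3 -> no']
```

Only the nodes on the path are visited, so the cost of one prediction depends on the depth of the reached leaf rather than on the total size of the tree.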

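In the previous example, the leaves of the decision tree contain classification predictions; that is, each leaf contains one animal species from a set of possible species.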

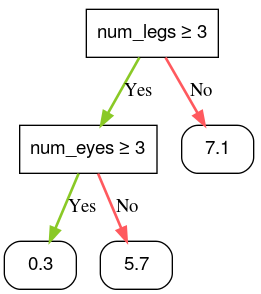

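Similarly, decision trees can predict numerical values by labeling the leaves with regression predictions (numerical values). For example, the following decision tree predicts an animal's cuteness score, a number between 0 and 10.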

Figure 3. A decision tree that makes numerical predictions.
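Because inference only follows conditions down to a single leaf, a small regression tree can be written out as nested `if` statements whose leaves return numbers instead of class names. This is a minimal sketch: the function name `cuteness_score`, its thresholds, and its leaf scores are invented for illustration and are not taken from Figure 3.

```python
def cuteness_score(num_legs: int, num_eyes: int) -> float:
    """Hypothetical regression tree: each leaf returns a cuteness score in [0, 10].

    The thresholds and leaf values are made up for illustration only.
    """
    if num_legs >= 3:        # root condition
        if num_eyes >= 3:    # condition on the root's "yes" branch
            return 1.5       # leaf
        return 8.0           # leaf
    return 6.0               # leaf on the root's "no" branch


# Routing the example {num_legs: 4, num_eyes: 2} reaches the 8.0 leaf.
print(cuteness_score(num_legs=4, num_eyes=2))  # 8.0
```

The routing logic is the same as for classification; only the type of value stored in each leaf changes.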