This is an ox.

Figure 19. An ox.

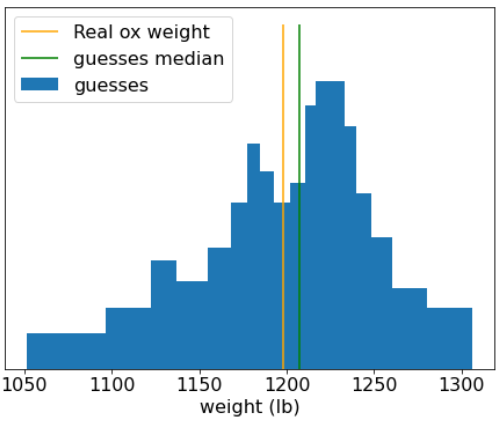

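In 1906, a weight-judging competition was held in England. 787 participants guessed the weight of an ox. The median error of the individual guesses was 37 lb (an error of 3.1%). However, the overall median of the guesses was only 9 lb away from the actual weight of the ox (1,198 lb), an error of only 0.7%.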

Figure 20. Histogram of individual weight guesses.

This anecdote illustrates the "wisdom of the crowd": in certain situations, collective opinion provides very good judgment.

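Mathematically, the wisdom of the crowd can be modeled with the central limit theorem: informally, the squared error between a value and the average of N noisy estimates of that value tends to zero with a 1/N factor. However, if the estimates are not independent, the variance is greater.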
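The 1/N behavior can be checked with a small simulation. The sketch below is only an illustration under stated assumptions: the Gaussian noise model, the value `noise_std=50.0`, and the function name `mean_squared_error_of_average` are hypothetical choices made for this example, not data from the 1906 competition. It also averages with the mean, whereas the anecdote reports medians.

```python
import random
import statistics


def mean_squared_error_of_average(n_estimates, true_value=1198.0,
                                  noise_std=50.0, n_trials=2000, seed=0):
    """Empirical squared error between true_value and the mean of
    n_estimates independent noisy estimates (Gaussian noise is an assumption)."""
    rng = random.Random(seed)
    squared_errors = []
    for _ in range(n_trials):
        # Each estimate is the true value plus independent noise.
        estimates = [rng.gauss(true_value, noise_std) for _ in range(n_estimates)]
        squared_errors.append((statistics.fmean(estimates) - true_value) ** 2)
    return statistics.fmean(squared_errors)


for n in (1, 10, 100):
    mse = mean_squared_error_of_average(n)
    # Under independence, the expected squared error is noise_std**2 / N.
    print(f"N={n:>3}  empirical MSE={mse:8.1f}  noise_std**2/N={50.0 ** 2 / n:8.1f}")
```

With independent noise, the empirical squared error should track `noise_std**2 / N` closely, which is the 1/N factor described above.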

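In machine learning, an ensemble is a collection of models whose predictions are averaged (or aggregated in some other way). If the models in the ensemble are different enough from one another, and none of them is individually too poor, the quality of the ensemble is generally better than that of any individual model. An ensemble requires more training and inference time than a single model: after all, you must train and run inference on multiple models instead of one.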
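As a rough illustration of "averaged or aggregated," the sketch below shows two common aggregation rules: the mean for numerical predictions and a majority vote for class labels. The function names and the sample predictions are hypothetical and stand in for the outputs of real models.

```python
from collections import Counter
from statistics import fmean


def average_predictions(predictions):
    """Aggregate numerical predictions by taking their mean."""
    return fmean(predictions)


def majority_vote(predictions):
    """Aggregate class predictions by picking the most common label."""
    return Counter(predictions).most_common(1)[0][0]


# Hypothetical outputs of three models for a single example.
print(average_predictions([1190.0, 1210.0, 1203.0]))  # 1201.0
print(majority_vote(["cat", "dog", "cat"]))           # cat
```

Either rule requires running every model in the ensemble, which is where the extra inference cost comes from.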

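Informally, for an ensemble to work best, the individual models should be independent. For example, an ensemble of 10 copies of exactly the same model (that is, not independent at all) is no better than the individual model. On the other hand, forcing models to be independent can make them worse. Effective ensembling requires a balance between model independence and the quality of the sub-models.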
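The effect of independence can be sketched with a toy simulation. It assumes each model's error is a mix of a component shared by all models and a component of its own, so that any two models' errors have a chosen correlation. The error model, the function name `ensemble_mse`, and all parameter values are assumptions made for illustration. The sketch does not model the loss of individual quality that forcing independence can cause.

```python
import math
import random
import statistics


def ensemble_mse(n_models, correlation, noise_std=1.0, n_trials=5000, seed=0):
    """Empirical squared error of the averaged prediction when the models'
    errors share a pairwise correlation (toy Gaussian error model)."""
    rng = random.Random(seed)
    shared_weight = math.sqrt(correlation)
    own_weight = math.sqrt(1.0 - correlation)
    squared_errors = []
    for _ in range(n_trials):
        shared = rng.gauss(0.0, noise_std)  # error common to every model
        errors = [
            shared_weight * shared + own_weight * rng.gauss(0.0, noise_std)
            for _ in range(n_models)
        ]
        squared_errors.append(statistics.fmean(errors) ** 2)
    return statistics.fmean(squared_errors)


for rho in (0.0, 0.5, 1.0):
    print(f"correlation={rho:.1f}  MSE of 10-model ensemble={ensemble_mse(10, rho):.3f}")
```

Under this toy model, the expected squared error of the average is `noise_std**2 * (correlation + (1 - correlation) / n_models)`. With correlation 1 (identical models), averaging gives no gain over a single model. With correlation 0, the error shrinks by the full 1/N factor.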