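Unlike random forests, gradient boosted trees can overfit. Therefore, as with neural networks, you can apply regularization and early stopping using a validation dataset.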

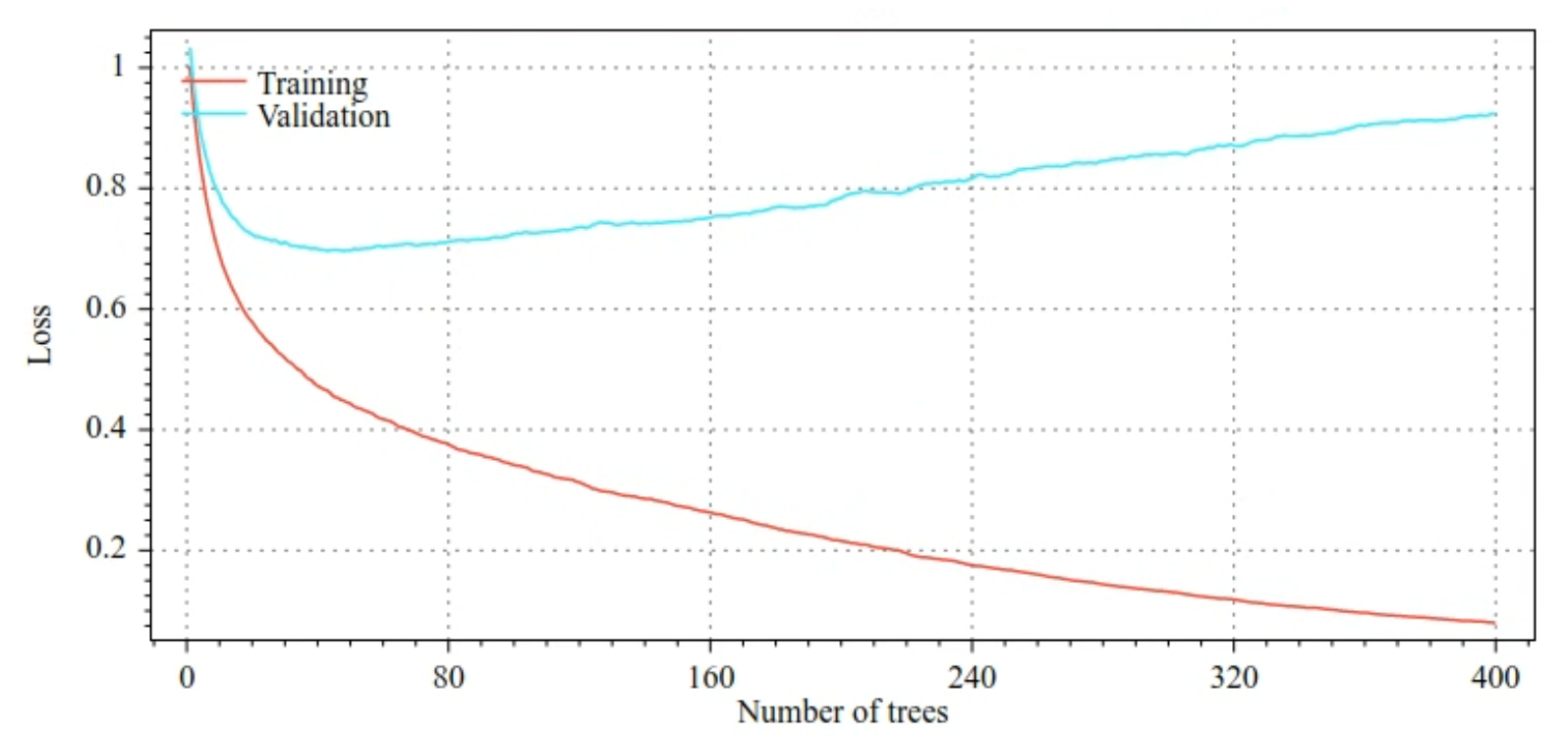

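For example, the following figures show the loss and accuracy curves on the training and validation sets when training a GBT model. Notice how far apart the curves are, which suggests a high degree of overfitting.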

Figure 29. Loss vs. number of decision trees.

Figure 30. Accuracy vs. number of decision trees.
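Early stopping turns a validation loss curve like the one in Figure 29 into a decision about how many trees to keep. The following plain-Python sketch selects the number of trees from a list of per-tree validation losses. The function name `select_num_trees` and its `patience` parameter are hypothetical illustrations; TF-DF's own early stopping is configured through model hyperparameters and is not necessarily implemented this way.

```python
def select_num_trees(valid_losses, patience=3):
    """Return the number of trees that minimizes the validation loss.

    valid_losses[i] is the validation loss of the ensemble after i + 1 trees.
    Training stops once `patience` consecutive trees fail to improve on the
    best loss seen so far; the ensemble is then truncated to the best size.
    """
    best_loss = float("inf")
    best_num_trees = 0
    trees_without_improvement = 0
    for i, loss in enumerate(valid_losses):
        if loss < best_loss:
            best_loss = loss
            best_num_trees = i + 1
            trees_without_improvement = 0
        else:
            trees_without_improvement += 1
            if trees_without_improvement >= patience:
                break  # validation loss stopped improving: stop early
    return best_num_trees


# The validation loss decreases, then rises as the model starts to overfit.
losses = [0.70, 0.55, 0.48, 0.45, 0.46, 0.47, 0.49, 0.52]
print(select_num_trees(losses))  # → 4
```

A larger `patience` tolerates noisy validation curves at the cost of training a few extra trees that are then discarded.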

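Common regularization parameters for gradient boosted trees include:

- The maximum depth of the trees.

- The shrinkage rate.

- The ratio of attributes tested at each node.

- The L1 and L2 coefficients on the loss.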
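Two of these regularizers, shrinkage and an L2 coefficient, are easiest to see in a toy implementation. The following is a minimal from-scratch sketch of gradient boosting for regression with the squared loss, assuming a single numerical feature and depth-1 trees (stumps). Each leaf predicts sum(residuals) / (count + l2), and splits are scored with the matching regularized gain; this follows the XGBoost-style formulation and is not how TF-DF implements gradient boosted trees. All function names are hypothetical.

```python
def fit_stump(xs, residuals, l2=0.0):
    """Fit a depth-1 tree (stump) on one numerical feature.

    Each leaf predicts sum(residuals) / (count + l2). With l2 = 0 this is the
    mean residual; a larger l2 pulls leaf values toward 0. The split maximizes
    the matching regularized gain: sum over leaves of sum(r)**2 / (count + l2).
    """
    def leaf_value(rs):
        return sum(rs) / (len(rs) + l2)

    def leaf_gain(rs):
        return sum(rs) ** 2 / (len(rs) + l2)

    best = None  # (gain, threshold, left_value, right_value)
    # Every distinct value except the largest leaves both sides non-empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        gain = leaf_gain(left) + leaf_gain(right)
        if best is None or gain > best[0]:
            best = (gain, threshold, leaf_value(left), leaf_value(right))
    if best is None:  # all feature values are equal: use a single leaf
        value = leaf_value(residuals)
        return (float("inf"), value, value)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def gradient_boost(xs, ys, num_trees, shrinkage=0.1, l2=0.0):
    """Train stumps sequentially: each one fits the current ensemble's errors."""
    base = sum(ys) / len(ys)  # initial prediction: the mean label
    preds = [base] * len(ys)
    trees = []
    for _ in range(num_trees):
        # For the squared loss (y - p)**2 / 2, the negative gradient is y - p.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals, l2)
        trees.append(stump)
        # Shrinkage: add only a fraction of each new tree's output.
        preds = [p + shrinkage * predict_stump(stump, x) for p, x in zip(preds, xs)]
    return base, shrinkage, trees


def predict(model, x):
    base, shrinkage, trees = model
    return base + shrinkage * sum(predict_stump(t, x) for t in trees)


# With a small shrinkage, more trees are needed to fit the data.
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
for num_trees in (5, 50):
    model = gradient_boost(xs, ys, num_trees=num_trees, shrinkage=0.1)
    print(num_trees, round(predict(model, 2), 2))  # target for x=2 is 1.0
# → 5 2.18
# → 50 1.01
```

Because each tree is fit to the residuals left by all previous trees, the loop is inherently sequential. A smaller shrinkage makes each step more conservative, which is why it is usually paired with early stopping on the number of trees.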

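Note that the decision trees in gradient boosted trees are generally much shallower than those in random forest models. By default, gradient boosted trees in TF-DF are grown to depth 6. Because the trees are shallow, the minimum number of examples per leaf has little impact and generally does not need tuning.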

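Needing a validation dataset is a problem when the number of training examples is small. Therefore, it is common to train gradient boosted trees inside a cross-validation loop, or to disable early stopping when the model is known not to overfit.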
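A cross-validation loop avoids permanently setting aside one validation set: across k folds, every example is used for validation exactly once. The following plain-Python sketch shows the loop structure. `train_and_evaluate` is a hypothetical callback standing in for training a gradient boosted trees model (for example, with early stopping disabled) and scoring it on the held-out fold; `mean_baseline` is a deliberately trivial stand-in model used only to make the sketch runnable. A real pipeline would typically shuffle the examples before splitting.

```python
def k_fold_splits(num_examples, k):
    """Yield (train_indices, valid_indices) for each of the k folds."""
    indices = list(range(num_examples))
    fold_size, remainder = divmod(num_examples, k)
    start = 0
    for fold in range(k):
        # The first `remainder` folds get one extra example.
        end = start + fold_size + (1 if fold < remainder else 0)
        yield indices[:start] + indices[end:], indices[start:end]
        start = end


def cross_validate(examples, labels, k, train_and_evaluate):
    """Average the validation score of `train_and_evaluate` over k folds."""
    scores = []
    for train_idx, valid_idx in k_fold_splits(len(examples), k):
        scores.append(train_and_evaluate(
            [examples[i] for i in train_idx], [labels[i] for i in train_idx],
            [examples[i] for i in valid_idx], [labels[i] for i in valid_idx]))
    return sum(scores) / len(scores)


def mean_baseline(train_x, train_y, valid_x, valid_y):
    # Hypothetical stand-in model: predict the mean training label and
    # return the mean absolute error on the validation fold.
    mean = sum(train_y) / len(train_y)
    return sum(abs(y - mean) for y in valid_y) / len(valid_y)


xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
print(round(cross_validate(xs, ys, 3, mean_baseline), 3))  # → 2.667
```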

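Usage example

In the previous chapter, we trained a random forest on a small dataset. In this example, we simply replace the random forest model with a gradient boosted trees model: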

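```python
import tensorflow_decision_forests as tfdf

# tf_train_dataset and tf_valid_dataset are assumed to be defined as in
# the previous chapter.

# Part of the training dataset will be used as validation (and removed
# from training).
model = tfdf.keras.GradientBoostedTreesModel()
model.fit(tf_train_dataset)

# The user provides the validation dataset.
model = tfdf.keras.GradientBoostedTreesModel()
model.fit(tf_train_dataset, validation_data=tf_valid_dataset)

# Disable early stopping and the validation dataset. All the examples are
# used for training. validation_ratio and early_stopping are hyperparameters
# of the model constructor, not arguments of fit().
model = tfdf.keras.GradientBoostedTreesModel(
    validation_ratio=0.0,
    early_stopping="NONE")
model.fit(tf_train_dataset)
# Note: When validation_ratio=0, early stopping is automatically disabled,
# so early_stopping="NONE" is redundant here.
```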

Usage and limitations

Gradient boosted trees have some pros and cons.

Pros

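- Like decision trees, they natively support numerical and categorical features and often do not need feature preprocessing.

- Gradient boosted trees have default hyperparameters that often give great results. Nevertheless, tuning those hyperparameters can significantly improve the model.

- Gradient boosted tree models are generally small (in number of nodes and in memory) and fast to run (often just 1 or 2 µs per example).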

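Cons

- The decision trees must be trained sequentially, which can slow training considerably. However, this slowdown is somewhat offset by the decision trees being smaller.

- Like random forests, gradient boosted trees cannot learn and reuse internal representations. Each decision tree (and each branch of each decision tree) must relearn the dataset's patterns. On some datasets, notably those with unstructured data (for example, images or text), this causes gradient boosted trees to perform worse than other methods.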