PaLM 2 的构建和评估方式

How PaLM 2 was built and evaluated

构建 PaLM 2

Building PaLM 2

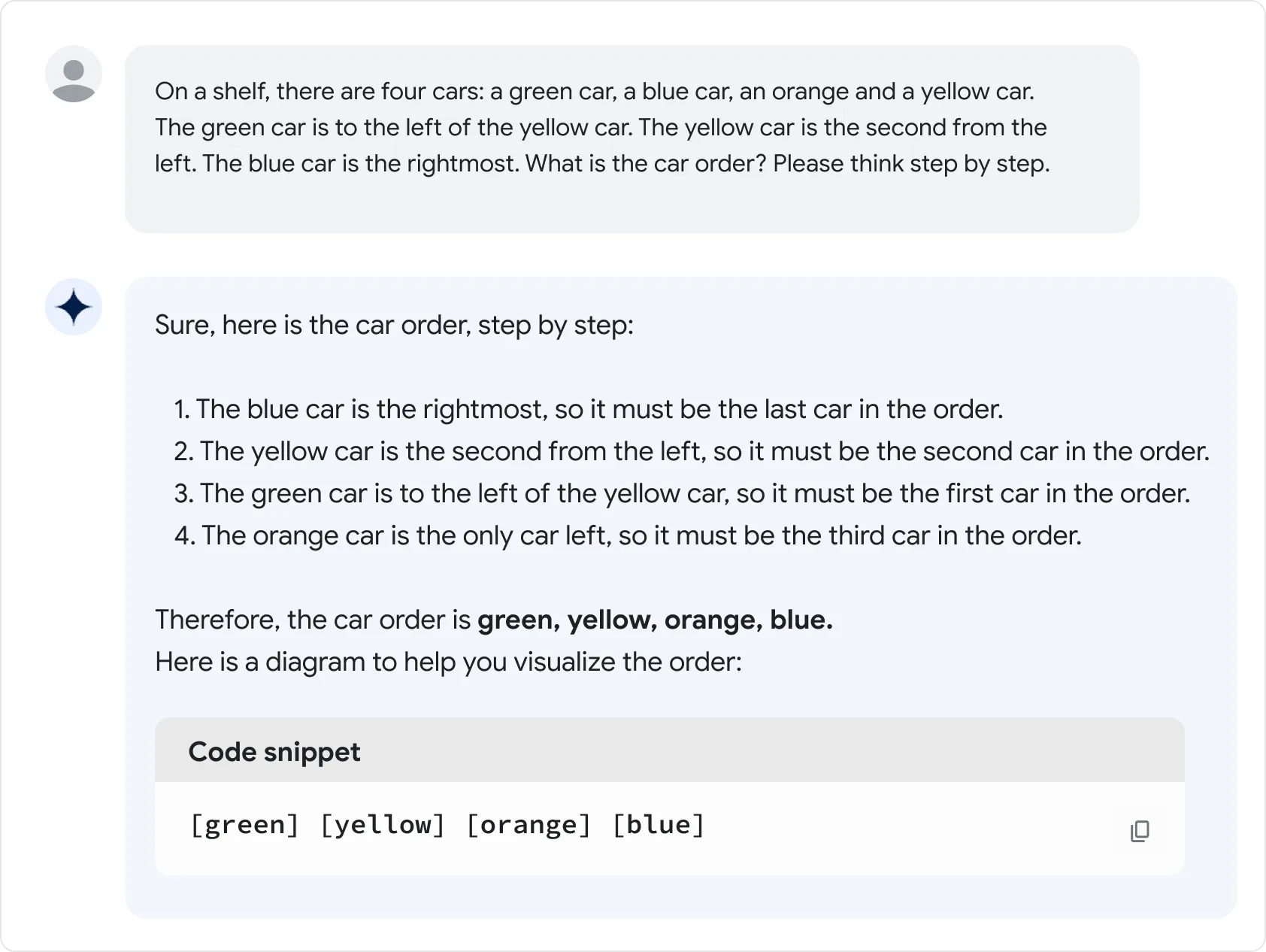

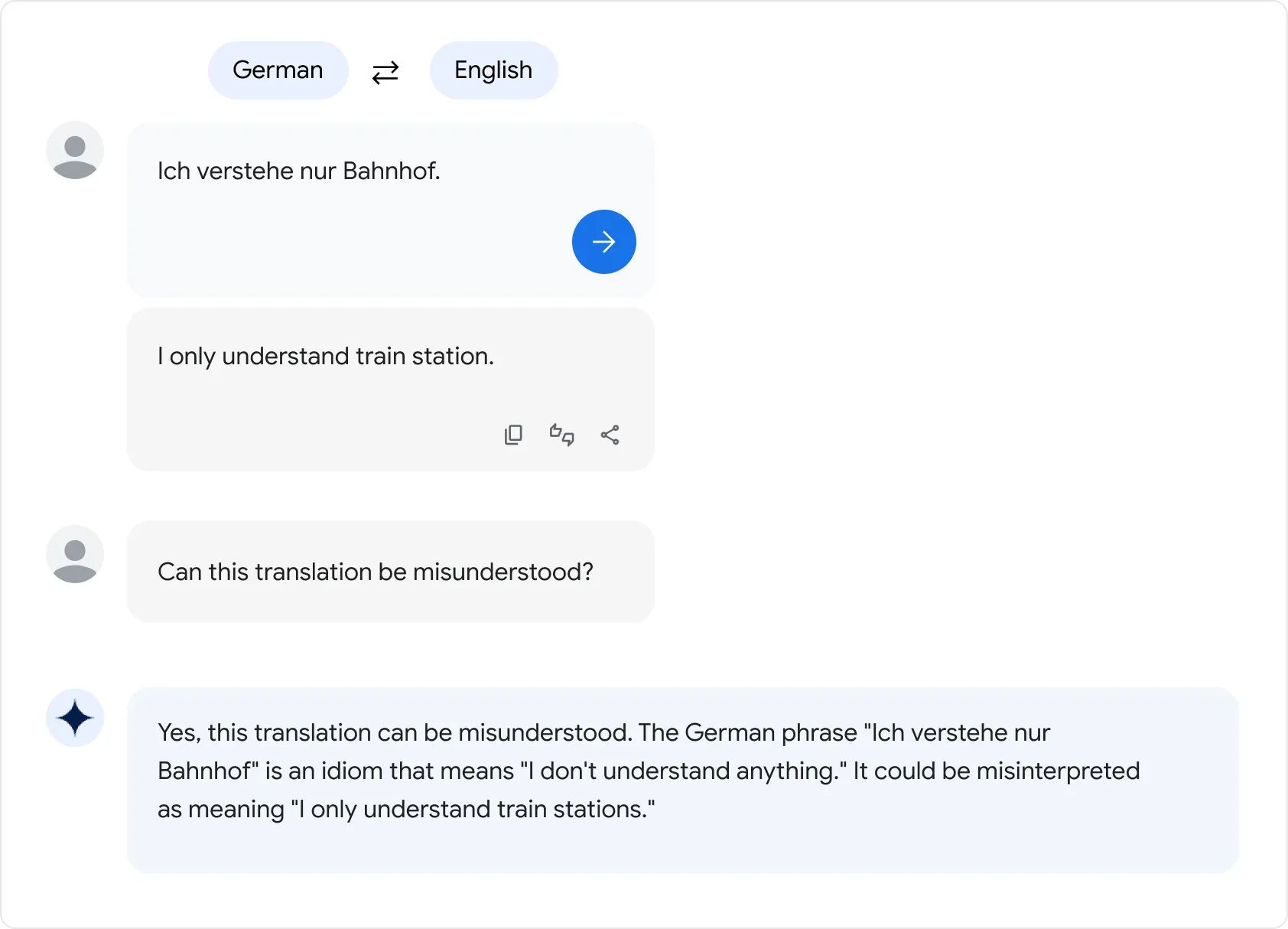

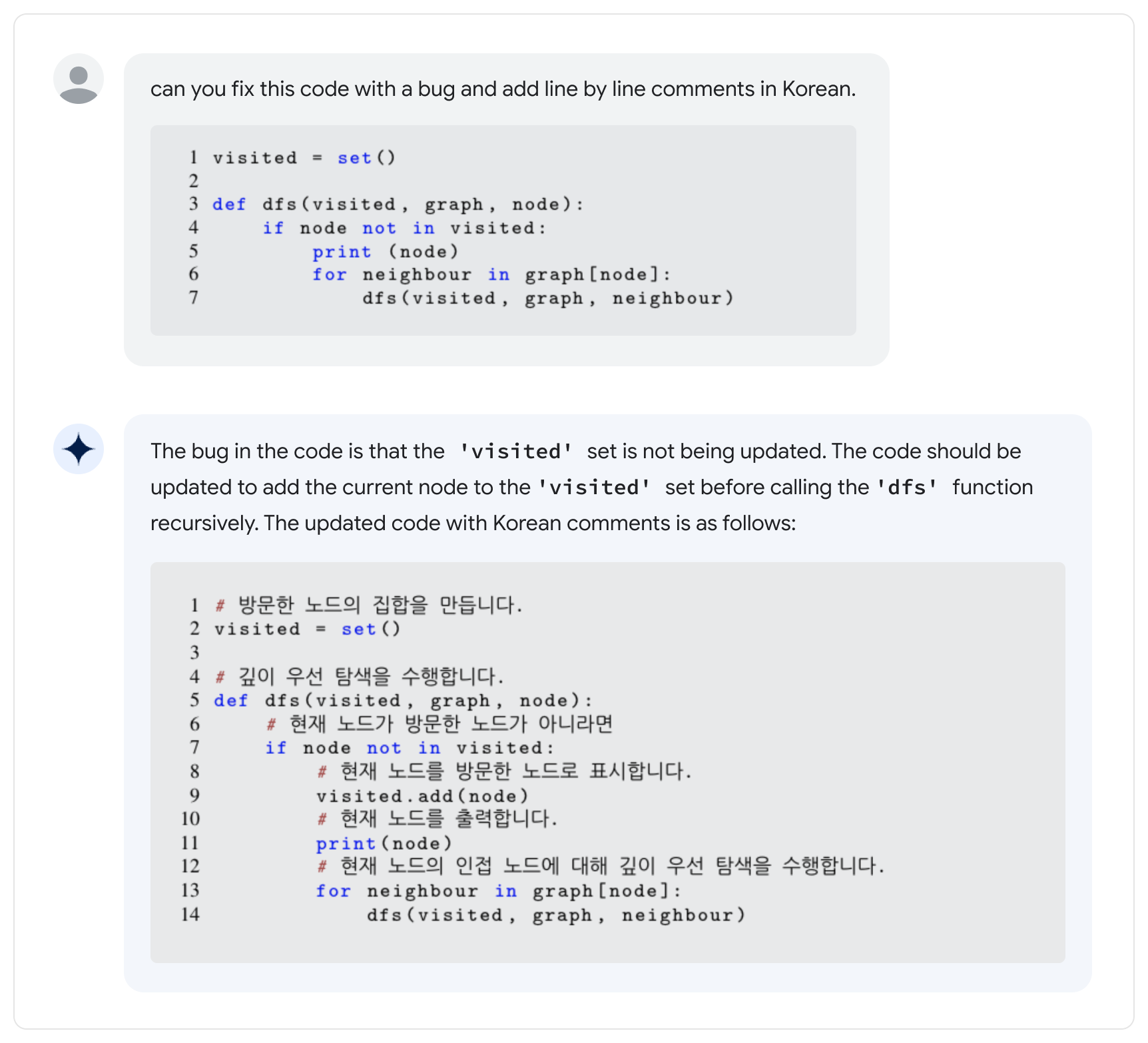

PaLM 2 excels at tasks like advanced reasoning, translation, and code generation because of how it was built. It improves upon its predecessor, PaLM, by unifying three distinct research advancements in large language models:

PaLM 2 能轻车熟路地完成高级推理、翻译和代码生成等任务,这得益于它的构建方式。青出于蓝而胜于蓝,PaLM 2 对前身 PaLM 的超越主要体现在,它整合了大语言模型领域中三项截然不同的研究成果:

- Use of compute-optimal scaling: The basic idea of compute-optimal scaling is to scale the model size and the training dataset size in proportion to each other. This new technique makes PaLM 2 smaller than PaLM, but more efficient with overall better performance, including faster inference, fewer parameters to serve, and a lower serving cost.

- Improved dataset mixture: Previous LLMs, like PaLM, used pre-training datasets that were mostly English-only text. PaLM 2 improves on its corpus with a more multilingual and diverse pre-training mixture, which includes hundreds of human and programming languages, mathematical equations, scientific papers, and web pages.

- Updated model architecture and objective: PaLM 2 has an improved architecture. PaLM 2 and its latest version were trained on a variety of different tasks, all of which helps PaLM 2 learn different aspects of language.

- 计算优化型扩缩技术: 这项技术的基本理念是,让模型规模和训练数据集规模以互成比例的方式扩缩。有了这项新技术的加持,PaLM 2 比 PaLM 更小但更高效,整体性能也更卓越,包括推理速度更快、参数响应量更少以及响应成本更低。

- 更完善的混合数据集: 之前的 LLM(例如 PaLM)使用的预训练数据集大多是纯英语文本。相比之下,PaLM 2 的预训练语料库采用了语言种类更多的多样化混合数据集,包括数百种人类语言和编程语言、数学方程式、科学论文和网页。

- 更新后的模型架构和目标: PaLM 2 具有经过改进的架构。PaLM 2 及其最新版本受过各种不同任务的训练,让 PaLM 2 得以学习涉及语言的各方面知识。