Page Summary

-

Embeddings are low-dimensional representations of high-dimensional data, often used to capture semantic relationships between items.

-

Embeddings place similar items closer together in the embedding space, allowing for efficient machine learning on large datasets.

-

The distance between points in an embedding space represents the relative similarity between the corresponding items.

-

Real-world embeddings can encode complex relationships, like those between countries and their capitals, allowing models to detect patterns.

-

Static embeddings like word2vec represent all meanings of a word with a single point, which can be a limitation in some cases.

An embedding is a vector representation of data in embedding space. Generally speaking, a model finds potential embeddings by projecting the high-dimensional space of initial data vectors into a lower-dimensional space. For a discussion of high-dimensional versus low-dimensional data, see the Categorical Data module.

Embeddings make it easier to do machine learning on large feature vectors, such as the sparse vectors representing meal items discussed in the previous section. Sometimes the relative positions of items in embedding space have a potential semantic relationship, but often the process of finding a lower-dimensional space, and relative positions in that space, is not interpretable by humans, and the resulting embeddings are difficult to understand.

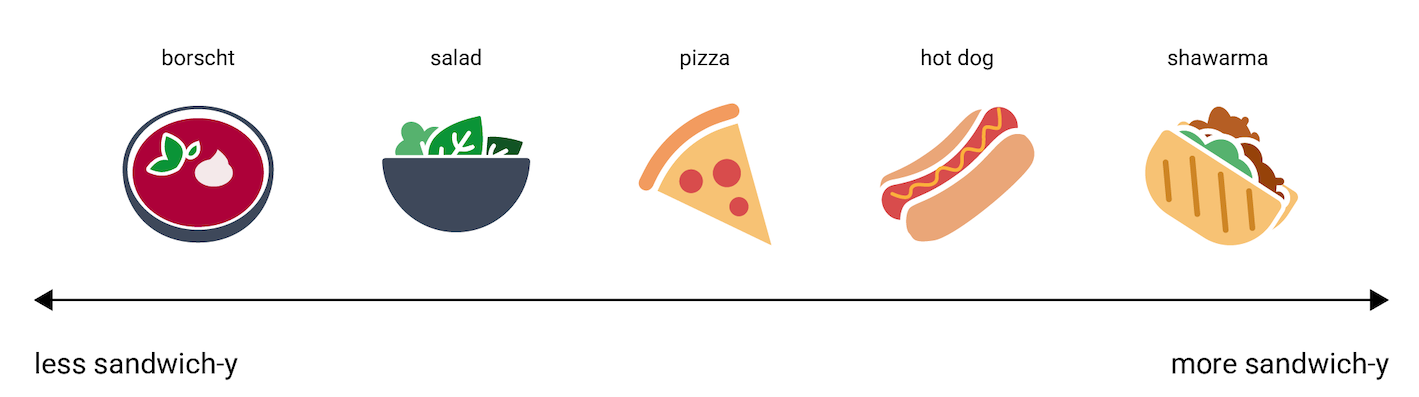

Still, for the sake of human understanding, to give an idea of how embedding vectors represent information, consider the following one-dimensional representation of the dishes hot dog, pizza, salad, shawarma, and borscht, on a scale of "least like a sandwich" to "most like a sandwich." The single dimension is an imaginary measure of "sandwichness."

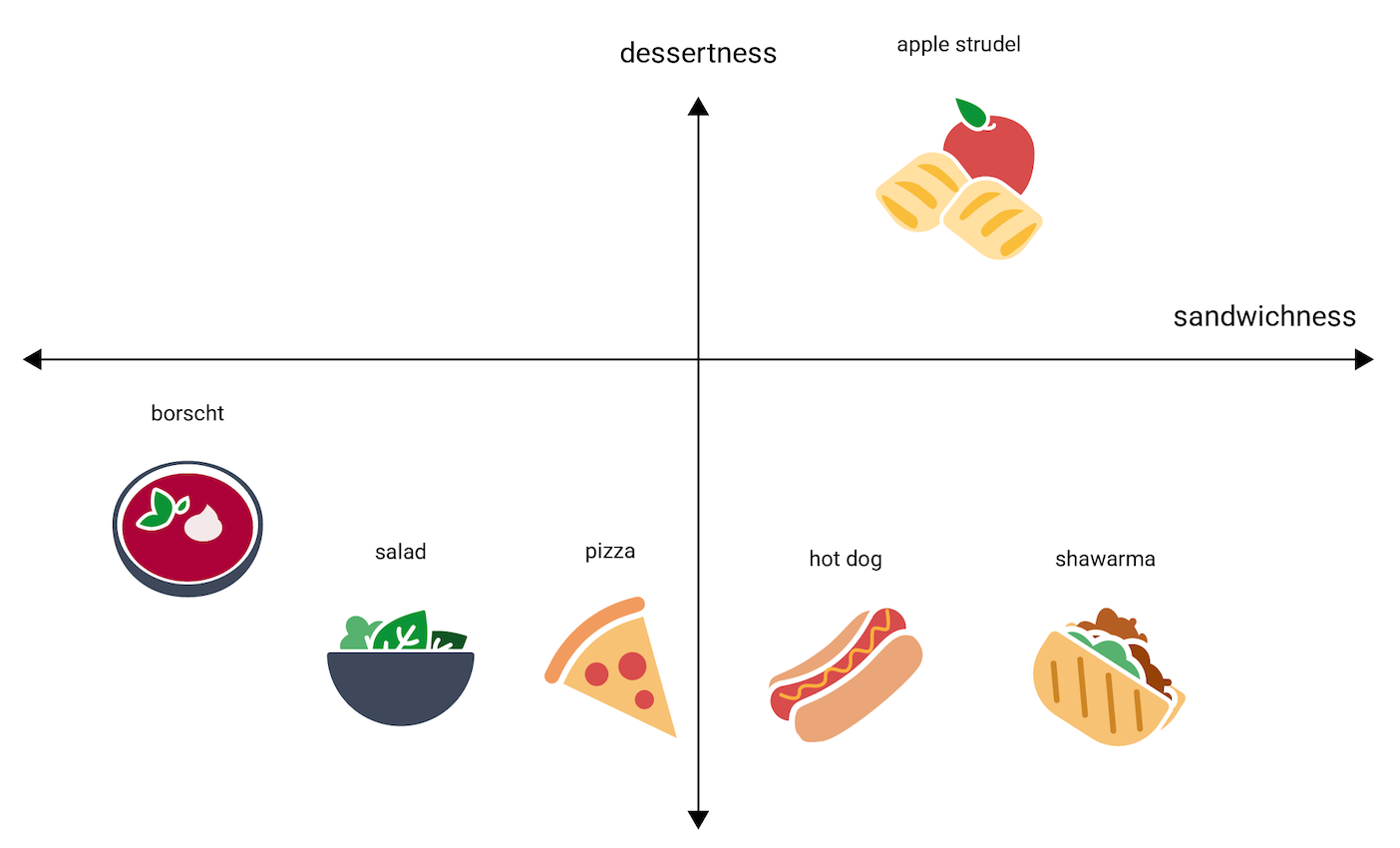

Where on this line would an

apple strudel

fall? Arguably, it could be placed between hot dog and shawarma. But apple

strudel also seems to have an additional dimension of sweetness

or dessertness that makes it very different from the other options.

The following figure visualizes this by adding a "dessertness" dimension:

An embedding represents each item in n-dimensional space with n floating-point numbers (typically in the range –1 to 1 or 0 to 1). The embedding in Figure 3 represents each food in one-dimensional space with a single coordinate, while Figure 4 represents each food in two-dimensional space with two coordinates. In Figure 4, "apple strudel" is in the upper-right quadrant of the graph and could be assigned the point (0.5, 0.3), whereas "hot dog" is in the bottom-right quadrant of the graph and could be assigned the point (0.2, –0.5).

In an embedding, the distance between any two items can be calculated

mathematically, and can be interpreted as a measure of relative similarity

between those two items. Two things that are close to each other, like

shawarma and hot dog in Figure 4, are more closely related in the model's

representation of the data than two things more distant from each

other, like apple strudel and borscht.

Notice also that in the 2D space in Figure 4, apple strudel is much farther

from shawarma and hot dog than it would be in the 1D space, which matches

intuition: apple strudel is not as similar to a hot dog or a shawarma as hot

dogs and shawarmas are to each other.

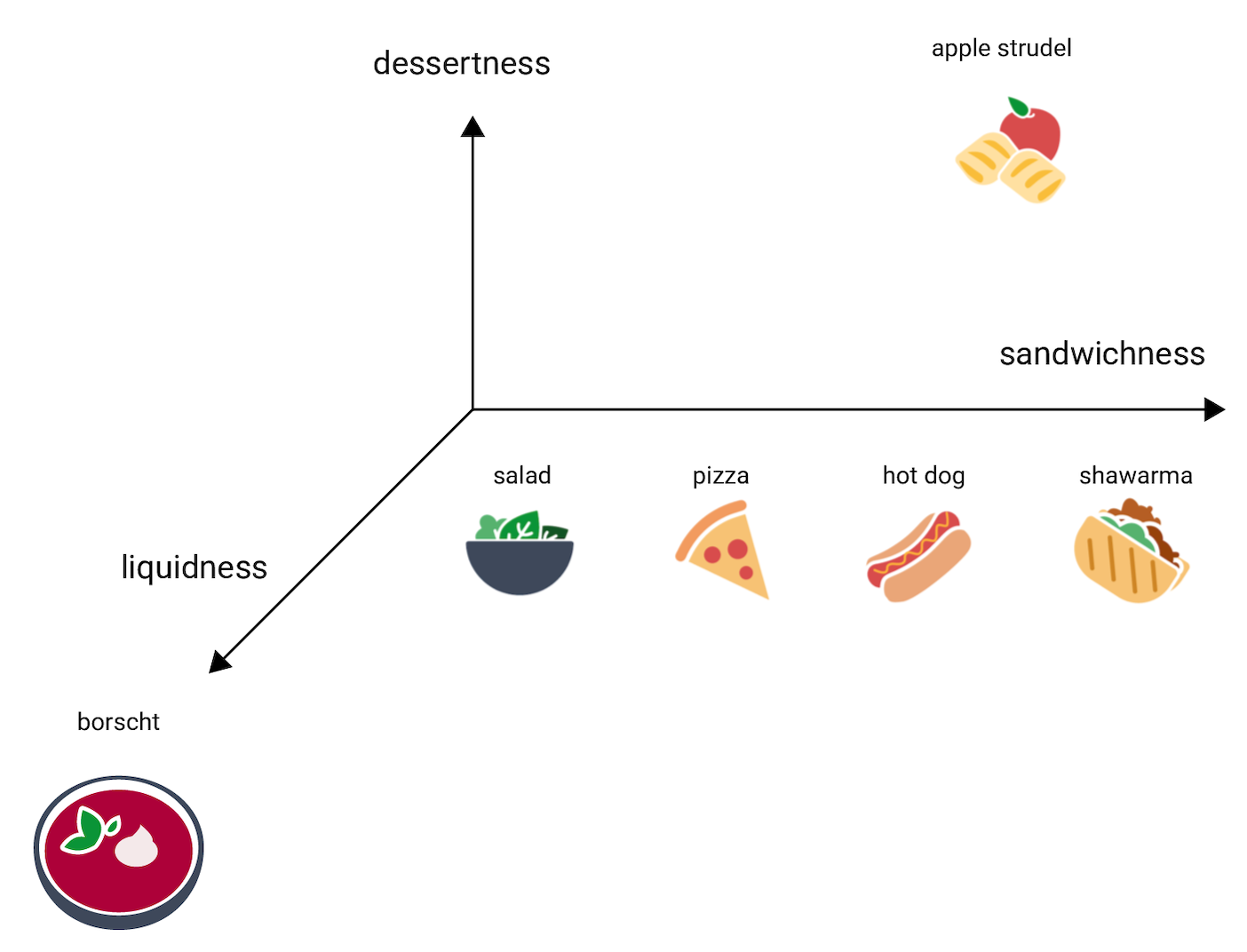

Now consider borscht, which is much more liquid than the other items. This suggests a third dimension, liquidness, or how liquid a food might be. Adding that dimension, the items could be visualized in 3D in this way:

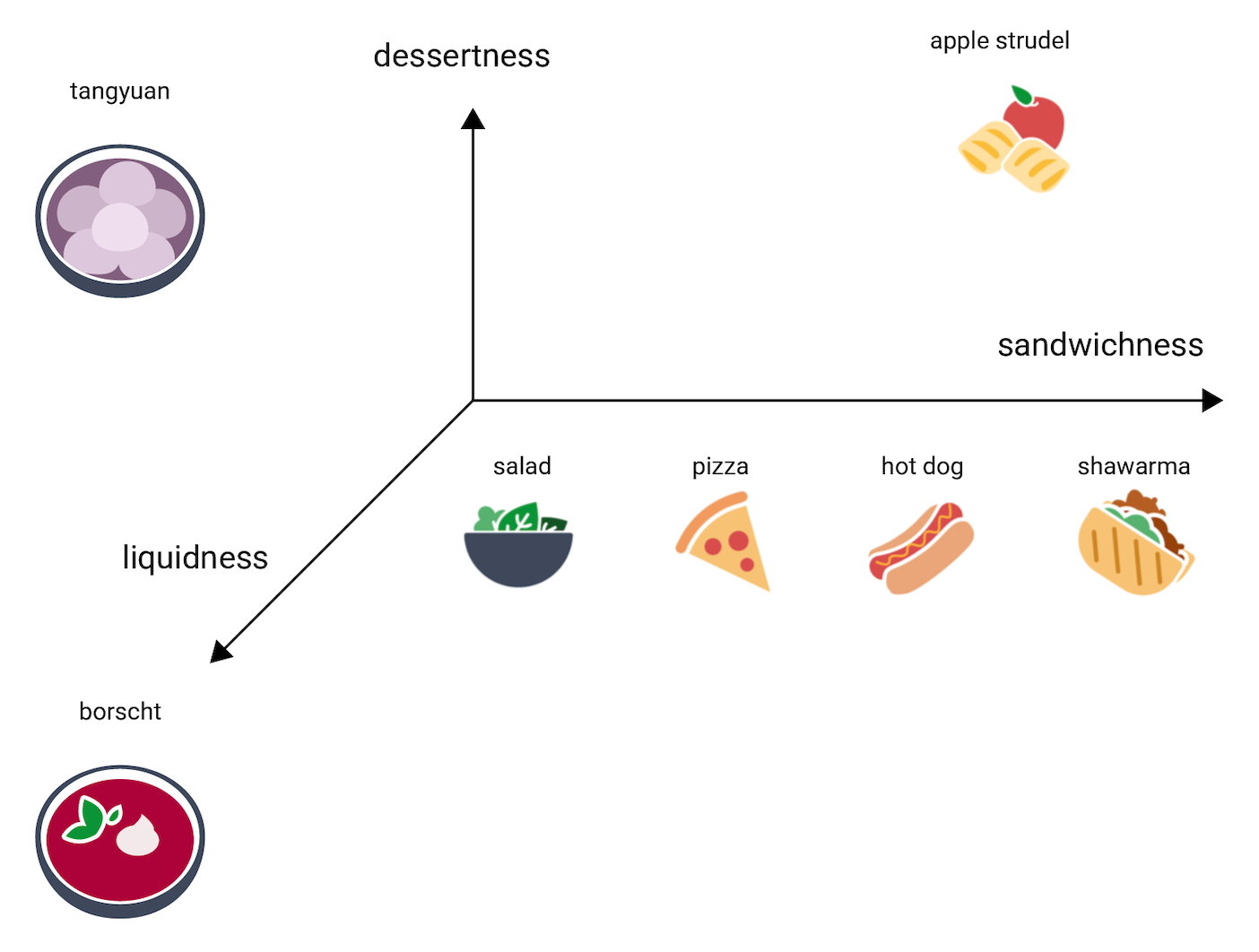

Where in this 3D space would tangyuan go? It's soupy, like borscht, and a sweet dessert, like apple strudel, and most definitely not a sandwich. Here is one possible placement:

Notice how much information is expressed in these three dimensions. You could imagine adding additional dimensions, like how meaty or baked a food might be, though 4D, 5D, and higher-dimensional spaces are difficult to visualize.

Real-world embedding spaces

In the real world, embedding spaces are d-dimensional, where d is much higher than 3, though lower than the dimensionality of the data, and relationships between data points are not necessarily as intuitive as in the contrived illustration above. (For word embeddings, d is often 256, 512, or 1024.1)

In practice, the ML practitioner usually sets the specific task and the number of embedding dimensions. The model then tries to arrange the training examples to be close in an embedding space with the specified number of dimensions, or tunes for the number of dimensions, if d is not fixed. The individual dimensions are rarely as understandable as "dessertness" or "liquidness." Sometimes what they "mean" can be inferred but this is not always the case.

Embeddings will usually be specific to the task, and differ from each other when the task differs. For example, the embeddings generated by a vegetarian versus non-vegetarian classification model will be different from the embeddings generated by a model that suggests dishes based on time of day or season. "Cereal" and "breakfast sausage" would probably be close together in the embedding space of a time-of-day model but far apart in the embedding space of a vegetarian versus non-vegetarian model, for example.

Static embeddings

While embeddings differ from task to task, one task has some general applicability: predicting the context of a word. Models trained to predict the context of a word assume that words appearing in similar contexts are semantically related. For example, training data that includes the sentences "They rode a burro down into the Grand Canyon" and "They rode a horse down into the canyon" suggests that "horse" appears in similar contexts to "burro." It turns out that embeddings based on semantic similarity work well for many general language tasks.

While it's an older example, and largely superseded by other models, the

word2vec model remains useful for illustration. word2vec trains on a

corpus of documents to obtain a single

global embedding per word. When each word or data point has a single embedding

vector, this is called a static embedding. The following video walks

through a simplified illustration of word2vec training.

Research suggests that these static embeddings, once trained, encode some degree of semantic information, particularly in relationships between words. That is, words that are used in similar contexts will be closer to each other in embedding space. The specific embeddings vectors generated will depend on the corpus used for training. See T. Mikolov et al (2013), "Efficient estimation of word representations in vector space", for details.

-

François Chollet, Deep Learning with Python (Shelter Island, NY: Manning, 2017), 6.1.2. ↩