Page Summary

- Inference involves using a trained model to make predictions on unlabeled examples, and it can be done statically or dynamically.

- Static inference generates predictions in advance and caches them, making it suitable for scenarios where prediction speed is critical but limiting its ability to handle uncommon inputs.

- Dynamic inference generates predictions on demand, offering flexibility for diverse inputs but potentially increasing latency and computational demands.

- Choosing between static and dynamic inference depends on factors like model complexity, desired prediction speed, and the nature of the input data.

- Static inference is advantageous when cost and prediction verification are prioritized, while dynamic inference excels in handling diverse, real-time predictions.

Inference is the process of making predictions by applying a trained model to unlabeled examples. Broadly speaking, a model can infer predictions in one of two ways:

- Static inference (also called offline inference or batch inference) means the model makes predictions on a set of common unlabeled examples and then caches those predictions somewhere.

- Dynamic inference (also called online inference or real-time inference) means that the model only makes predictions on demand, for example, when a client requests a prediction.
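The difference between the two modes can be sketched in a few lines of Python. This is a minimal illustration, not a production design: `toy_model`, `build_static_cache`, `serve_static`, and `serve_dynamic` are hypothetical names, and the "model" is a placeholder function standing in for a real trained model.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical stand-in for a trained model: any function from input to prediction.
Model = Callable[[str], float]

def toy_model(example: str) -> float:
    """Placeholder 'model' that scores an example by its length."""
    return float(len(example))

def build_static_cache(model: Model, common_examples: List[str]) -> Dict[str, float]:
    """Static inference: predict ahead of time and cache the results."""
    return {example: model(example) for example in common_examples}

def serve_static(cache: Dict[str, float], example: str) -> Optional[float]:
    """Serve only what was cached; uncommon inputs get no prediction."""
    return cache.get(example)

def serve_dynamic(model: Model, example: str) -> float:
    """Dynamic inference: run the model when the request arrives."""
    return model(example)

cache = build_static_cache(toy_model, ["cat", "horse"])
print(serve_static(cache, "cat"))           # cached prediction
print(serve_static(cache, "axolotl"))       # None: this input was never cached
print(serve_dynamic(toy_model, "axolotl"))  # computed on demand
```

In the static path the model runs only inside `build_static_cache`; in the dynamic path it runs once per request.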

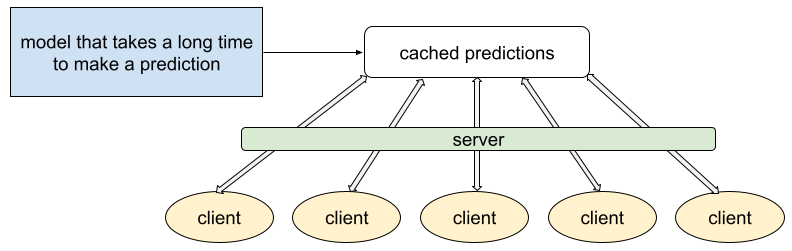

To use an extreme example, imagine a very complex model that takes one hour to infer a prediction. This would probably be an excellent situation for static inference, because the predictions can be computed ahead of time and clients only read cached results.

Suppose this same complex model mistakenly uses dynamic inference instead of static inference. If many clients request predictions around the same time, most of them won't receive a prediction for hours or days.

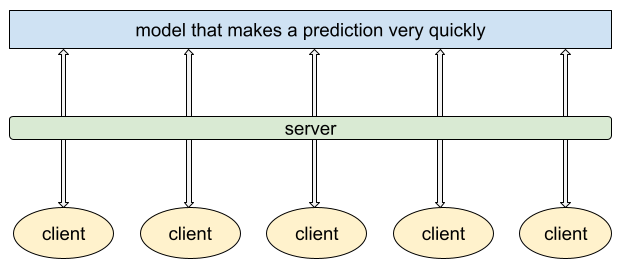

Now consider a model that infers quickly, perhaps in 2 milliseconds, using relatively few computational resources. In this situation, clients can receive predictions quickly and efficiently through dynamic inference, as suggested in Figure 5.
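Simple arithmetic makes the contrast between the two scenarios concrete. The sketch below assumes that all requests arrive at the same moment and that each worker serves one request at a time in arrival order; the function name `completion_times_ms`, the request count of 48, and the single worker are illustrative assumptions, not figures from this page.

```python
from typing import List

HOUR_MS = 60 * 60 * 1000  # one hour, in milliseconds

def completion_times_ms(num_requests: int, ms_per_prediction: int,
                        num_workers: int = 1) -> List[int]:
    """Finish time of each request, assuming all requests arrive at time zero
    and each worker serves one request at a time, first come, first served."""
    return [((i // num_workers) + 1) * ms_per_prediction
            for i in range(num_requests)]

# The one-hour model and the 2-millisecond model, each facing 48 simultaneous requests.
slow = completion_times_ms(num_requests=48, ms_per_prediction=HOUR_MS)
fast = completion_times_ms(num_requests=48, ms_per_prediction=2)

print(slow[-1] // HOUR_MS)  # 48: the last client waits 48 hours
print(fast[-1])             # 96: the last client waits 96 milliseconds
```

Adding workers shortens the queue proportionally, but with a one-hour model even a large pool leaves clients waiting at least an hour per prediction.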

Static inference

Static inference offers certain advantages and disadvantages.

Advantages

- You don't need to worry much about the cost of inference, because predictions are computed ahead of time rather than per request.

- You can verify predictions after generating them and before pushing them.
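Post-verification might look like the following sketch, which checks a freshly generated batch before it replaces the predictions currently being served. It assumes, purely for illustration, that predictions are probabilities in the range [0, 1]; `verify_predictions` and `push_if_valid` are hypothetical helpers, and a real pipeline would use checks suited to its own model.

```python
import math
from typing import Dict

def verify_predictions(predictions: Dict[str, float]) -> Dict[str, str]:
    """Return a map of example -> problem for every prediction that fails a check."""
    problems: Dict[str, str] = {}
    for example, score in predictions.items():
        if math.isnan(score):
            problems[example] = "score is NaN"
        elif not 0.0 <= score <= 1.0:
            # Assumption: scores are probabilities, so they must lie in [0, 1].
            problems[example] = "score outside [0, 1]"
    return problems

def push_if_valid(predictions: Dict[str, float], live_cache: Dict[str, float]) -> bool:
    """Replace the live cache only when every prediction passes verification."""
    if verify_predictions(predictions):
        return False  # keep serving the previous predictions
    live_cache.clear()
    live_cache.update(predictions)
    return True

live = {"cat": 0.5}
print(push_if_valid({"cat": 0.2, "dog": 1.7}, live))  # False: one score is out of range
print(push_if_valid({"cat": 0.2, "dog": 0.9}, live))  # True: the batch is pushed
```

Because nothing is served until the batch passes, a bad model run never reaches clients.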

Disadvantages

- Can only serve cached predictions, so the system might not be able to serve predictions for uncommon input examples.

- Update latency is likely measured in hours or days.
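One way to gauge the first disadvantage is to measure what fraction of real requests the cache can actually answer. The sketch below is an assumption-laden illustration: `cache_hit_rate` is a hypothetical helper and the request log is made up.

```python
from typing import Iterable, Set

def cache_hit_rate(requests: Iterable[str], cached_keys: Set[str]) -> float:
    """Fraction of requests whose input has a cached prediction."""
    requests = list(requests)
    if not requests:
        raise ValueError("no requests to evaluate")
    hits = sum(1 for example in requests if example in cached_keys)
    return hits / len(requests)

# Made-up request log: two common inputs and two uncommon ones.
request_log = ["cat", "cat", "dog", "cat", "axolotl", "dog", "cat", "okapi"]
print(cache_hit_rate(request_log, {"cat", "dog"}))  # 0.75
```

Requests outside the cached set, such as the two uncommon inputs here, receive no prediction until the next batch run includes them.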

Dynamic inference

Dynamic inference offers certain advantages and disadvantages.

Advantages

- Can infer a prediction on any new item as it comes in, which is great for long-tail (less common) predictions.

Disadvantages

- Compute-intensive and latency-sensitive. This combination may limit model complexity; that is, you might have to build a simpler model that can infer predictions more quickly than a complex model could.

- Monitoring needs are more intensive.
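As one example of what such monitoring could involve, the sketch below records the latency of every on-demand prediction and reports a percentile. `LatencyMonitor` is a hypothetical class, and the nearest-rank percentile is one simple choice among several; real systems typically rely on a dedicated metrics library.

```python
import math
import time
from typing import Callable, List

class LatencyMonitor:
    """Records per-request latency for a dynamically served model."""

    def __init__(self) -> None:
        self.latencies_ms: List[float] = []

    def timed(self, predict: Callable[[str], float], example: str) -> float:
        """Run one prediction and record how long it took, in milliseconds."""
        start = time.perf_counter()
        result = predict(example)
        self.latencies_ms.append((time.perf_counter() - start) * 1000.0)
        return result

    def percentile(self, p: float) -> float:
        """Nearest-rank percentile of recorded latencies, for p in (0, 100]."""
        if not self.latencies_ms:
            raise ValueError("no latencies recorded")
        ordered = sorted(self.latencies_ms)
        rank = math.ceil(p / 100.0 * len(ordered))
        return ordered[max(rank, 1) - 1]

monitor = LatencyMonitor()
for word in ["cat", "horse", "axolotl"]:
    monitor.timed(lambda example: float(len(example)), word)
print(len(monitor.latencies_ms))  # 3 latencies recorded
```

Tracking a high percentile such as the 99th, rather than the average, highlights the slow requests that latency-sensitive clients notice most.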