了解如何在您自己的应用中使用增强人脸功能。

前提条件

确保您了解 AR 基础概念 以及如何在继续之前配置 ARCore 现场录像。

在 Android 中使用增强人脸

配置 ARCore 会话

在现有 ARCore 会话中选择前置摄像头,以开始使用增强人脸功能。请注意,选择前置摄像头 会导致许多更改 对 ARCore 行为的影响。

Java

// Set a camera configuration that usese the front-facing camera. CameraConfigFilter filter = new CameraConfigFilter(session).setFacingDirection(CameraConfig.FacingDirection.FRONT); CameraConfig cameraConfig = session.getSupportedCameraConfigs(filter).get(0); session.setCameraConfig(cameraConfig);

Kotlin

// Set a camera configuration that usese the front-facing camera. val filter = CameraConfigFilter(session).setFacingDirection(CameraConfig.FacingDirection.FRONT) val cameraConfig = session.getSupportedCameraConfigs(filter)[0] session.cameraConfig = cameraConfig

Java

Config config = new Config(session); config.setAugmentedFaceMode(Config.AugmentedFaceMode.MESH3D); session.configure(config);

Kotlin

val config = Config(session) config.augmentedFaceMode = Config.AugmentedFaceMode.MESH3D session.configure(config)

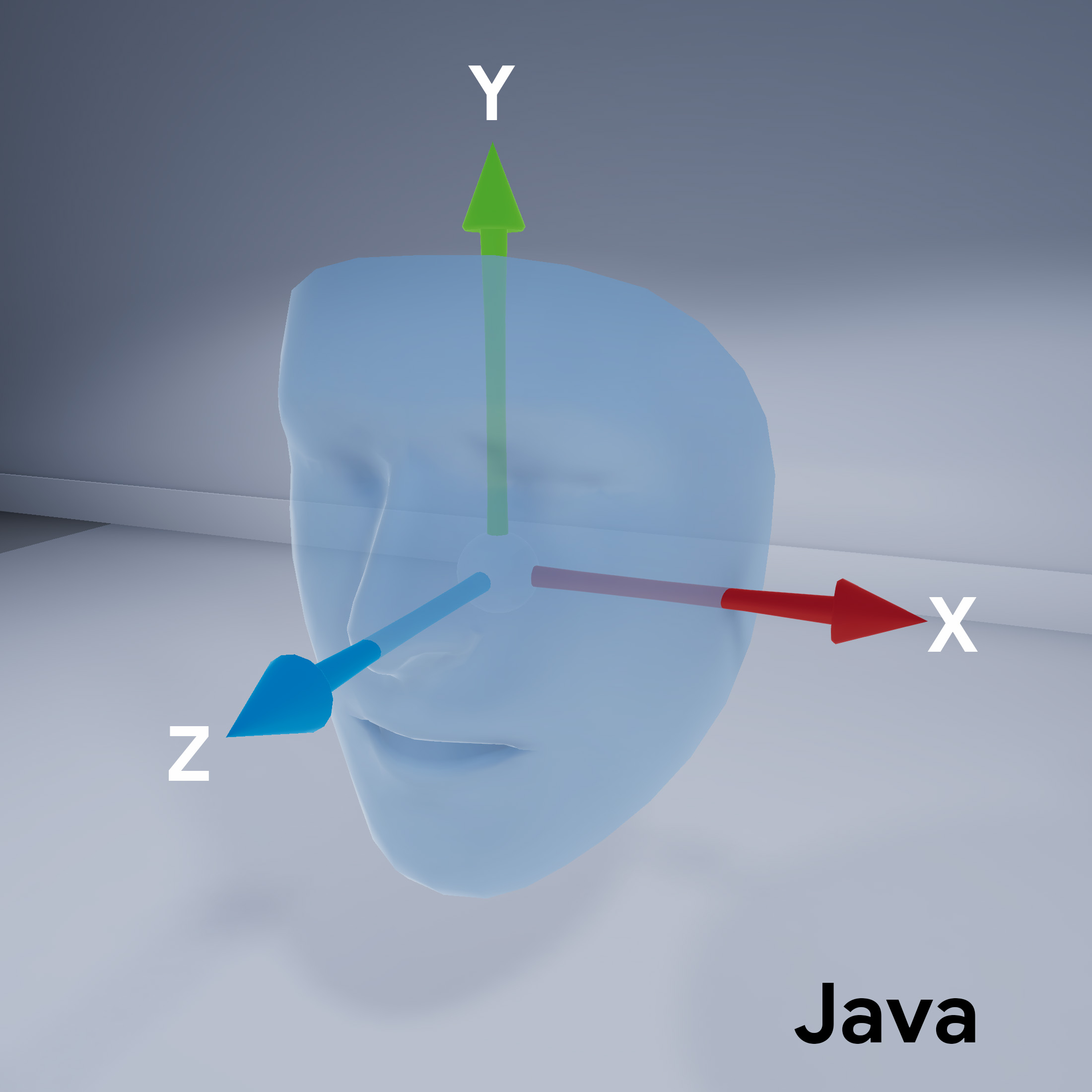

人脸网格方向

请注意面网格的方向:

访问检测到的面孔

获取 Trackable

每个帧。Trackable 是 ARCore 可以跟踪的内容,

可附加锚点。

Java

// ARCore's face detection works best on upright faces, relative to gravity. Collection<AugmentedFace> faces = session.getAllTrackables(AugmentedFace.class);

Kotlin

// ARCore's face detection works best on upright faces, relative to gravity. val faces = session.getAllTrackables(AugmentedFace::class.java)

获取 TrackingState

Trackable如果值为 TRACKING,

那么 ARCore 目前就可以知道其位置方向。

Java

for (AugmentedFace face : faces) { if (face.getTrackingState() == TrackingState.TRACKING) { // UVs and indices can be cached as they do not change during the session. FloatBuffer uvs = face.getMeshTextureCoordinates(); ShortBuffer indices = face.getMeshTriangleIndices(); // Center and region poses, mesh vertices, and normals are updated each frame. Pose facePose = face.getCenterPose(); FloatBuffer faceVertices = face.getMeshVertices(); FloatBuffer faceNormals = face.getMeshNormals(); // Render the face using these values with OpenGL. } }

Kotlin

faces.forEach { face -> if (face.trackingState == TrackingState.TRACKING) { // UVs and indices can be cached as they do not change during the session. val uvs = face.meshTextureCoordinates val indices = face.meshTriangleIndices // Center and region poses, mesh vertices, and normals are updated each frame. val facePose = face.centerPose val faceVertices = face.meshVertices val faceNormals = face.meshNormals // Render the face using these values with OpenGL. } }