Learn how to use the Augmented Faces feature in your own apps.

Prerequisites

Make sure that you understand fundamental AR concepts and how to configure an ARCore session before proceeding.

Using Augmented Faces in Android

Configure the ARCore session

Select the front camera in an existing ARCore session to start using Augmented Faces. Note that selecting the front camera causes a number of changes in ARCore behavior.

Java

    // Set a camera configuration that uses the front-facing camera.
    CameraConfigFilter filter =
        new CameraConfigFilter(session).setFacingDirection(CameraConfig.FacingDirection.FRONT);
    CameraConfig cameraConfig = session.getSupportedCameraConfigs(filter).get(0);
    session.setCameraConfig(cameraConfig);

Kotlin

    // Set a camera configuration that uses the front-facing camera.
    val filter = CameraConfigFilter(session).setFacingDirection(CameraConfig.FacingDirection.FRONT)
    val cameraConfig = session.getSupportedCameraConfigs(filter)[0]
    session.cameraConfig = cameraConfig

Enable AugmentedFaceMode:

Java

    Config config = new Config(session);
    config.setAugmentedFaceMode(Config.AugmentedFaceMode.MESH3D);
    session.configure(config);

Kotlin

    val config = Config(session)
    config.augmentedFaceMode = Config.AugmentedFaceMode.MESH3D
    session.configure(config)

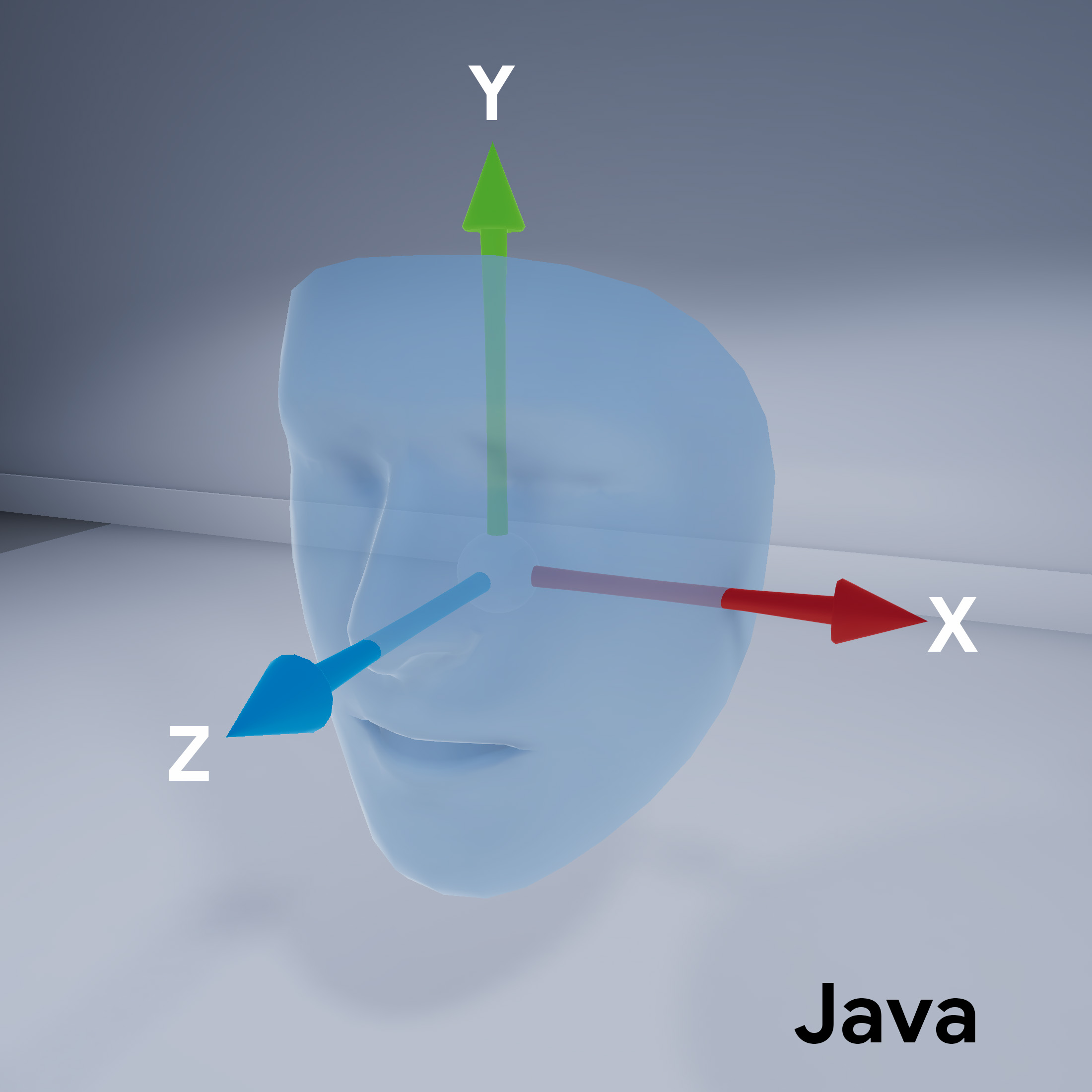

Orientasi mesh wajah

Perhatikan orientasi mesh wajah:

Access the detected face

Get a Trackable for each frame. A Trackable is something that ARCore can track and that Anchors can be attached to.

Java

    // ARCore's face detection works best on upright faces, relative to gravity.
    Collection<AugmentedFace> faces = session.getAllTrackables(AugmentedFace.class);

Kotlin

    // ARCore's face detection works best on upright faces, relative to gravity.
    val faces = session.getAllTrackables(AugmentedFace::class.java)

Get the TrackingState for each Trackable. If it is TRACKING, then its pose is currently known by ARCore.

Java

    for (AugmentedFace face : faces) {
      if (face.getTrackingState() == TrackingState.TRACKING) {
        // UVs and indices can be cached as they do not change during the session.
        FloatBuffer uvs = face.getMeshTextureCoordinates();
        ShortBuffer indices = face.getMeshTriangleIndices();

        // Center and region poses, mesh vertices, and normals are updated each frame.
        Pose facePose = face.getCenterPose();
        FloatBuffer faceVertices = face.getMeshVertices();
        FloatBuffer faceNormals = face.getMeshNormals();

        // Render the face using these values with OpenGL.
      }
    }

Kotlin

    faces.forEach { face ->
      if (face.trackingState == TrackingState.TRACKING) {
        // UVs and indices can be cached as they do not change during the session.
        val uvs = face.meshTextureCoordinates
        val indices = face.meshTriangleIndices

        // Center and region poses, mesh vertices, and normals are updated each frame.
        val facePose = face.centerPose
        val faceVertices = face.meshVertices
        val faceNormals = face.meshNormals

        // Render the face using these values with OpenGL.
      }
    }
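The comments in the snippets above note that UVs and triangle indices can be cached, while vertices and normals change every frame. One possible way to act on that is sketched below. `FaceMeshCache` and all of its methods are hypothetical names introduced for this illustration and are not part of ARCore. The sketch assumes the usual packing of the mesh buffers: (x, y, z) triples for vertices, (u, v) pairs for texture coordinates, and consecutive index triplets for triangles. It depends only on `java.nio`, so it can be tried without an AR session.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;
import java.nio.ShortBuffer;

/** Hypothetical helper (not an ARCore class) that keeps one copy of the static mesh data. */
final class FaceMeshCache {
    private FloatBuffer cachedUvs;      // (u, v) pairs, copied on the first call only
    private ShortBuffer cachedIndices;  // triangle index triplets, copied on the first call only

    /** Copies UVs and indices the first time it is called; later calls do nothing. */
    void cacheStaticData(FloatBuffer uvs, ShortBuffer indices) {
        if (cachedUvs != null) {
            return;
        }
        cachedUvs = copy(uvs);
        cachedIndices = copy(indices);
    }

    boolean isCached() {
        return cachedUvs != null;
    }

    /** Independent view of the cached UVs; call only after cacheStaticData. */
    FloatBuffer uvs() {
        return cachedUvs.duplicate();
    }

    /** Independent view of the cached indices; call only after cacheStaticData. */
    ShortBuffer indices() {
        return cachedIndices.duplicate();
    }

    /** Number of vertices in a buffer packed as (x, y, z) triples. */
    static int vertexCount(FloatBuffer vertices) {
        return vertices.remaining() / 3;
    }

    /** Number of triangles in a buffer packed as consecutive index triplets. */
    static int triangleCount(ShortBuffer indices) {
        return indices.remaining() / 3;
    }

    // Copies the remaining floats into a new direct buffer without moving the source position.
    private static FloatBuffer copy(FloatBuffer src) {
        FloatBuffer dst = ByteBuffer.allocateDirect(src.remaining() * Float.BYTES)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer();
        dst.put(src.duplicate());
        dst.rewind();
        return dst;
    }

    // Copies the remaining shorts into a new direct buffer without moving the source position.
    private static ShortBuffer copy(ShortBuffer src) {
        ShortBuffer dst = ByteBuffer.allocateDirect(src.remaining() * Short.BYTES)
                .order(ByteOrder.nativeOrder())
                .asShortBuffer();
        dst.put(src.duplicate());
        dst.rewind();
        return dst;
    }
}
```

In a render loop, this helper could be fed `face.getMeshTextureCoordinates()` and `face.getMeshTriangleIndices()` once a face reaches `TRACKING`, while `face.getMeshVertices()` and `face.getMeshNormals()` are still read on every frame. Copying into a separate direct buffer is a design choice made for this sketch, not something the source requires. It keeps the cached data independent of the buffer passed in.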