Dowiedz się, jak korzystać z funkcji Rozszerzonych twarzy we własnych aplikacjach.

Wymagania wstępne

Upewnij się, że znasz podstawowe pojęcia związane z AR. i dowiedz się, jak skonfigurować sesję ARCore, zanim przejdziesz dalej.

Korzystanie z powiększonych twarzy na Androidzie

Konfigurowanie sesji ARCore

Wybierz przedni aparat w istniejącej sesji ARCore, aby zacząć korzystać z funkcji rozpoznawania twarzy. Pamiętaj, że wybór przedniego aparatu spowoduje szereg zmian, w działaniu ARCore.

Java

// Set a camera configuration that usese the front-facing camera. CameraConfigFilter filter = new CameraConfigFilter(session).setFacingDirection(CameraConfig.FacingDirection.FRONT); CameraConfig cameraConfig = session.getSupportedCameraConfigs(filter).get(0); session.setCameraConfig(cameraConfig);

Kotlin

// Set a camera configuration that usese the front-facing camera. val filter = CameraConfigFilter(session).setFacingDirection(CameraConfig.FacingDirection.FRONT) val cameraConfig = session.getSupportedCameraConfigs(filter)[0] session.cameraConfig = cameraConfig

Włączanie AugmentedFaceMode:

Java

Config config = new Config(session); config.setAugmentedFaceMode(Config.AugmentedFaceMode.MESH3D); session.configure(config);

Kotlin

val config = Config(session) config.augmentedFaceMode = Config.AugmentedFaceMode.MESH3D session.configure(config)

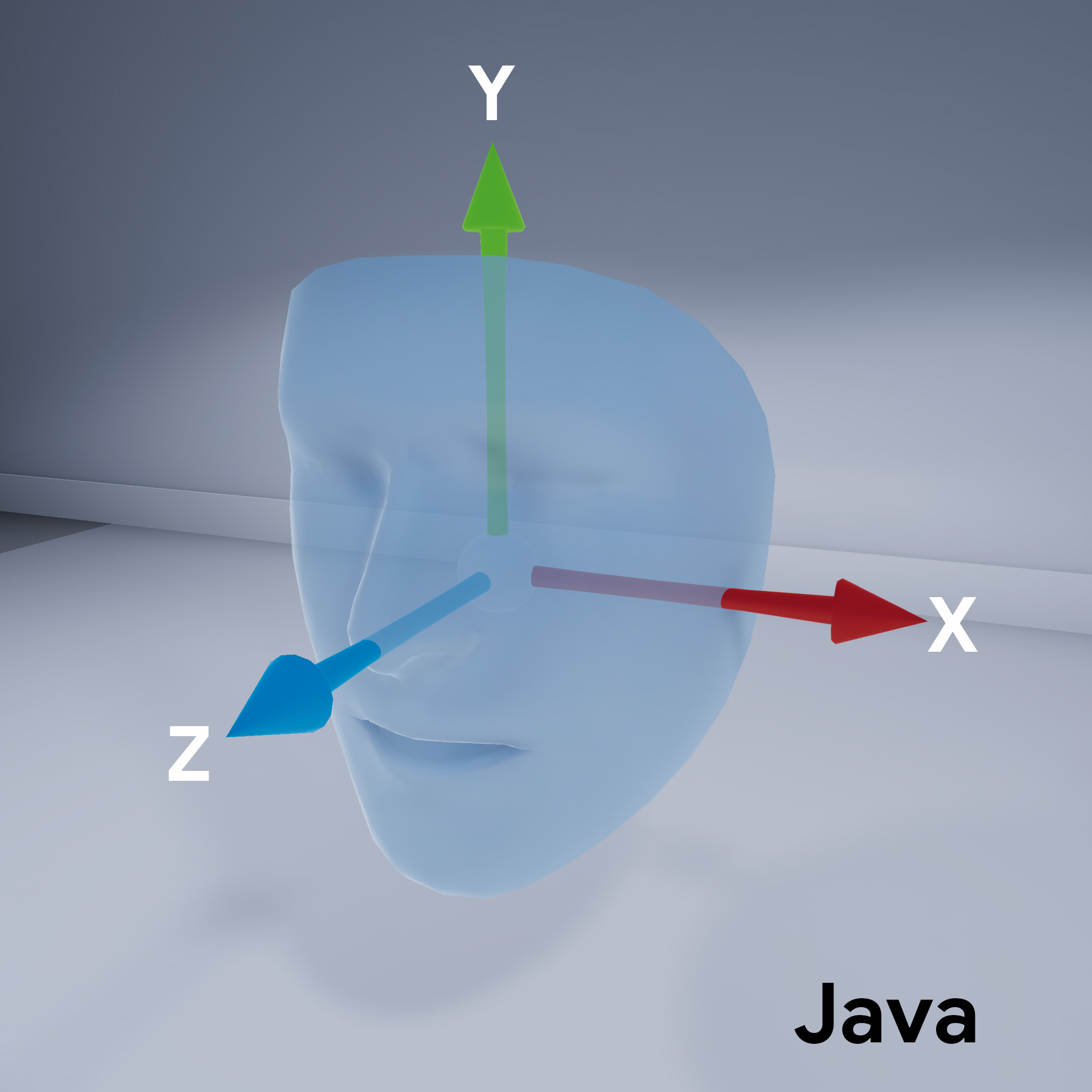

Orientacja siatki twarzy

Zwróć uwagę na orientację siatki twarzy:

Dostęp do wykrytej twarzy

Uzyskaj Trackable

dla każdej klatki. ARCore może śledzić Trackable,

Można dołączać do nich kotwice.

Java

// ARCore's face detection works best on upright faces, relative to gravity. Collection<AugmentedFace> faces = session.getAllTrackables(AugmentedFace.class);

Kotlin

// ARCore's face detection works best on upright faces, relative to gravity. val faces = session.getAllTrackables(AugmentedFace::class.java)

Weź do ręki: TrackingState

dla każdej wartości Trackable. Jeśli jest to TRACKING,

jego pozycja jest obecnie znana firmie ARCore.

Java

for (AugmentedFace face : faces) { if (face.getTrackingState() == TrackingState.TRACKING) { // UVs and indices can be cached as they do not change during the session. FloatBuffer uvs = face.getMeshTextureCoordinates(); ShortBuffer indices = face.getMeshTriangleIndices(); // Center and region poses, mesh vertices, and normals are updated each frame. Pose facePose = face.getCenterPose(); FloatBuffer faceVertices = face.getMeshVertices(); FloatBuffer faceNormals = face.getMeshNormals(); // Render the face using these values with OpenGL. } }

Kotlin

faces.forEach { face -> if (face.trackingState == TrackingState.TRACKING) { // UVs and indices can be cached as they do not change during the session. val uvs = face.meshTextureCoordinates val indices = face.meshTriangleIndices // Center and region poses, mesh vertices, and normals are updated each frame. val facePose = face.centerPose val faceVertices = face.meshVertices val faceNormals = face.meshNormals // Render the face using these values with OpenGL. } }