Recording and playback introduction

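Most augmented reality (AR) experiences are "real-time": they require the user to be in a specific place at a specific time, with the phone in a special AR mode and an AR app open. For example, to see how an AR couch would look in their living room, a user has to be physically in that room and "place" the couch in the on-screen environment.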

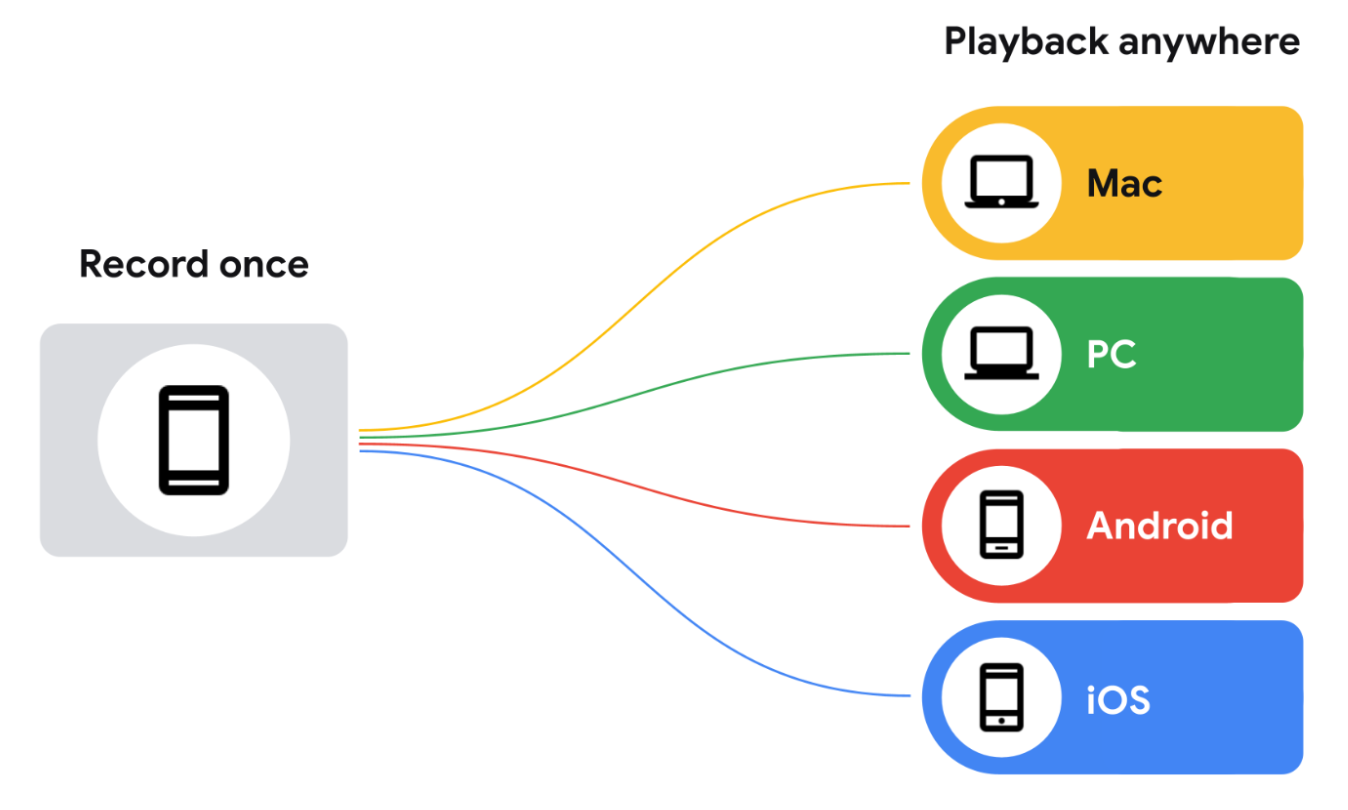

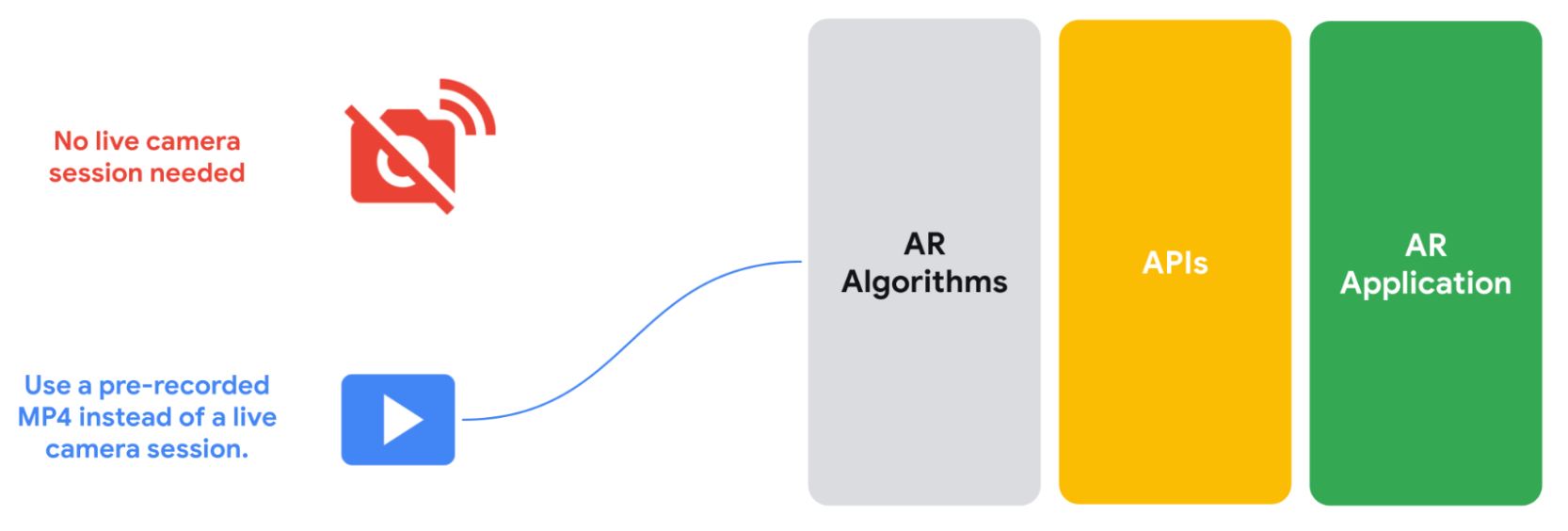

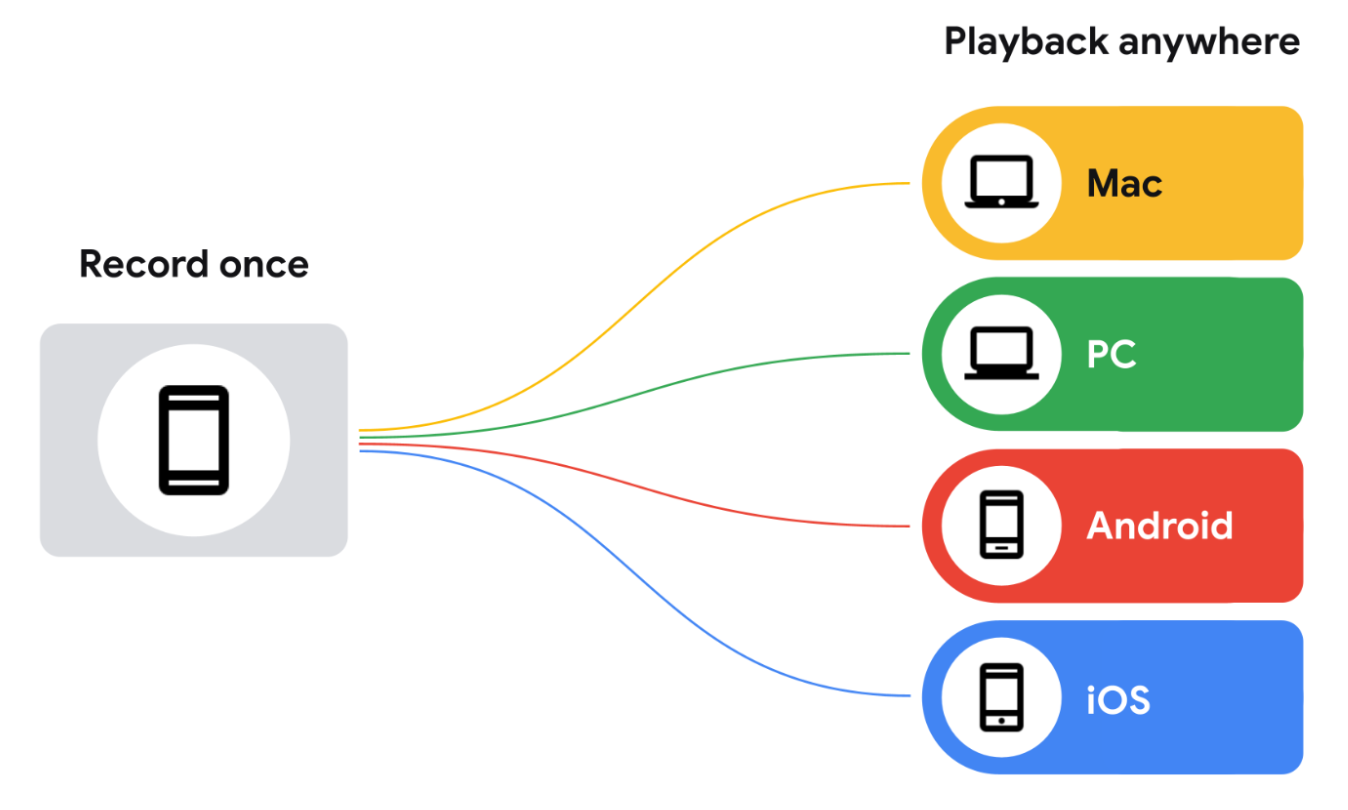

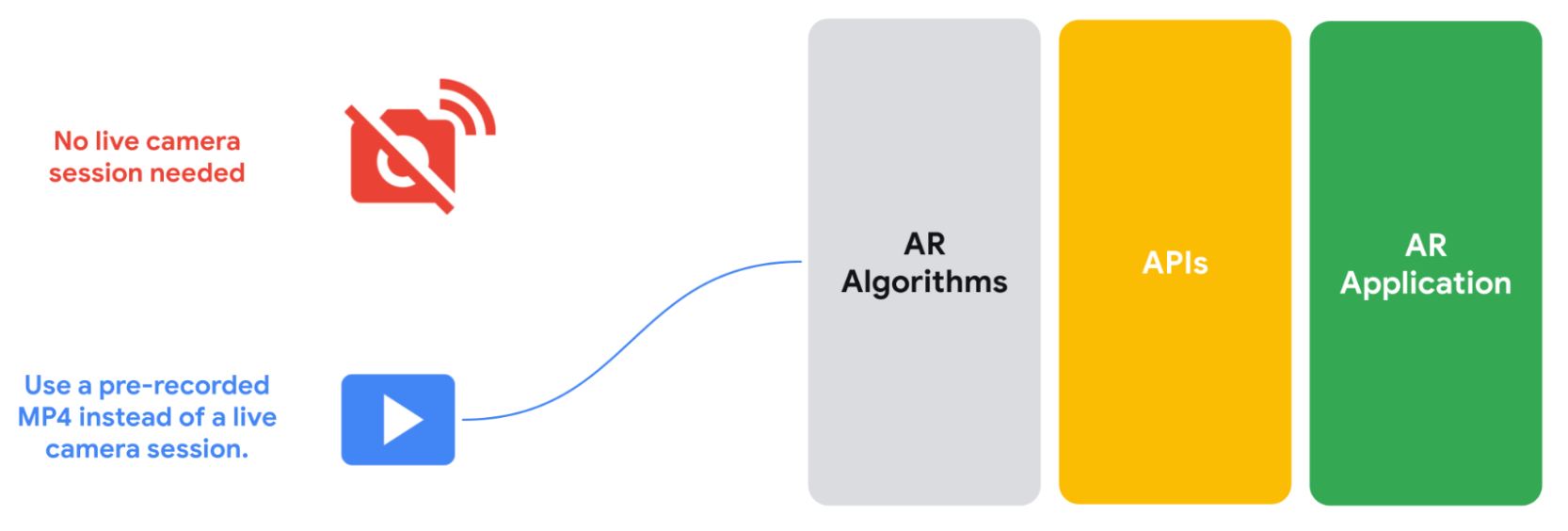

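The Recording and Playback API removes this "real-time" requirement, letting you create AR experiences that can be viewed anywhere, at any time. The Recording API stores the camera's video stream, IMU data, and any custom metadata you choose to save in an MP4 file. You can then feed these recordings to ARCore through the Playback API, which treats the MP4 just like a live session feed. Live camera sessions still work as before; the API simply gives your AR app the option of using a pre-recorded MP4 instead.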
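The choice between a live session and a recording can be pictured as a small decision made before the session starts. The following is a minimal, hypothetical sketch in plain Java: the `SessionInput` class, its `Kind` enum, and the `.mp4` extension check are illustrative assumptions, not part of the ARCore SDK. In the ARCore Java SDK, the recorded branch corresponds to handing the dataset to the session (for example with `Session.setPlaybackDatasetUri`) before resuming it; see the platform developer guide for the exact calls.

```java
import java.util.Locale;

/** Hypothetical helper: decides whether a session runs on live camera input or a recording. */
public class SessionInput {
    public enum Kind { LIVE_CAMERA, RECORDED_MP4 }

    public final Kind kind;
    public final String datasetPath; // null when kind is LIVE_CAMERA

    private SessionInput(Kind kind, String datasetPath) {
        this.kind = kind;
        this.datasetPath = datasetPath;
    }

    /** Picks playback when an .mp4 path is supplied, otherwise falls back to the live camera. */
    public static SessionInput choose(String recordingPathOrNull) {
        if (recordingPathOrNull != null
                && recordingPathOrNull.toLowerCase(Locale.ROOT).endsWith(".mp4")) {
            // Assumption: in a real app this is where the file would be handed to the
            // Playback API before the session is resumed.
            return new SessionInput(Kind.RECORDED_MP4, recordingPathOrNull);
        }
        return new SessionInput(Kind.LIVE_CAMERA, null);
    }

    public static void main(String[] args) {
        SessionInput input = choose(args.length > 0 ? args[0] : null);
        System.out.println(input.kind + (input.datasetPath == null ? "" : " " + input.datasetPath));
    }
}
```

Keeping this decision in one place means the rest of the app can stay unaware of whether its frames come from the camera or from a file.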

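End users can benefit from this feature too. Wherever they are, they can open any video recorded with the Recording and Playback API from their native gallery and add, edit, or play back AR objects, effects, and filters in it. For example, users can do their AR shopping on the train to work or while relaxing in bed.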

Use cases for developing with the Recording and Playback API

The Recording and Playback API removes the time and location constraints of building AR apps. Here are some ways you can use it in your own projects.

Record once, test anywhere

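Instead of traveling to a location every time you need to test an AR feature, you can record a video with the Recording API and play it back on any compatible device. Building an experience in a shopping mall? There's no need to go there every time you want to test a change. Record your visit once, then iterate and develop from your desk.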

Reduce iteration time

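Instead of recording a video for every combination of Android device you want to support and scenario you want to test, record each scenario once and play it back on multiple devices during the iteration phase.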

Reduce manual test burden across development teams

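Instead of creating a custom dataset for every new feature, reuse pre-recorded datasets when launching features that incorporate depth or the latest tracking improvements from ARCore.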

Device compatibility

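You need ARCore to record data with the Recording and Playback API, but you don't need it to play the recordings back as video. An MP4 recorded with this feature is essentially a video file with extra data, so it can be viewed in any video player. You can inspect these files with Android's ExoPlayer, or with any compatible player that can both demux MP4 files and handle the additional data added by ARCore.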

How video and AR data are recorded for playback

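ARCore saves recorded sessions as MP4 files on the target device. These files contain multiple video tracks and other miscellaneous data. Once a session is saved, you can point your app at this data instead of a live camera session.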

What's in a recording?

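ARCore captures the following data as H.264 video. You can view it in any MP4-compatible video player that can switch tracks. The highest-resolution track comes first in the list, because some MP4-compatible players automatically play the first track and don't let you choose which video track to play.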
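To check which tracks a recording contains, and in what order, you can walk the MP4 box structure directly. The sketch below is a minimal example in plain Java that assumes only the standard ISO base media file format layout (`moov` > `trak` > `mdia` > `hdlr`); the class name `Mp4TrackLister` is hypothetical, and the code makes no assumption about which handler types ARCore writes. It loads the whole file into memory, so it is only suitable for short recordings.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

/** Lists the handler type of each track in an MP4 (ISO base media file format) buffer. */
public class Mp4TrackLister {

    /** Returns handler types such as "vide", "soun" or "meta", in track order. */
    public static List<String> listTrackHandlers(byte[] file) {
        List<String> handlers = new ArrayList<>();
        walk(ByteBuffer.wrap(file), 0, file.length, "", handlers);
        return handlers;
    }

    // Walks the boxes in [start, end) and descends only into moov, trak and mdia.
    private static void walk(ByteBuffer buf, long start, long end, String parent, List<String> out) {
        long pos = start;
        while (pos + 8 <= end) {
            long size = buf.getInt((int) pos) & 0xFFFFFFFFL; // 32-bit big-endian box size
            String type = fourCc(buf, (int) pos + 4);
            long header = 8;
            if (size == 1) { // a 64-bit size follows the type field
                if (pos + 16 > end) break;
                size = buf.getLong((int) pos + 8);
                header = 16;
            } else if (size == 0) { // box extends to the end of the enclosing range
                size = end - pos;
            }
            if (size < header || size > end - pos) break; // malformed box: stop walking
            long body = pos + header;
            if (type.equals("moov") || type.equals("trak") || type.equals("mdia")) {
                walk(buf, body, pos + size, type, out);
            } else if (type.equals("hdlr") && parent.equals("mdia") && body + 12 <= pos + size) {
                // hdlr body: version/flags (4 bytes), pre_defined (4 bytes), handler_type (4 bytes)
                out.add(fourCc(buf, (int) body + 8));
            }
            pos += size;
        }
    }

    private static String fourCc(ByteBuffer buf, int offset) {
        byte[] b = new byte[4];
        for (int i = 0; i < 4; i++) b[i] = buf.get(offset + i);
        return new String(b, StandardCharsets.ISO_8859_1);
    }

    public static void main(String[] args) throws IOException {
        // Usage: java Mp4TrackLister recording.mp4
        System.out.println(listTrackHandlers(Files.readAllBytes(Paths.get(args[0]))));
    }
}
```

The first `vide` entry in the result is the track that a player without track selection would show by default.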

Primary video track (CPU image track)

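The primary video track records the environment or scene for later playback. By default, ARCore records the 640x480 (VGA) CPU image that it uses for motion tracking as the primary video stream.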

ARCore does not capture the (high-resolution) GPU texture that is rendered to the screen as the passthrough camera image.

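If you want a high-resolution image stream to be available during playback, you must select a camera config that provides a CPU image with the desired resolution. In this case:

- ARCore requests both the 640x480 (VGA) CPU image that it needs for motion tracking and the high-resolution CPU image specified by the selected camera config.

- Capturing the second CPU image stream may affect app performance, and the impact may vary from device to device.

- During playback, ARCore uses the high-resolution CPU image captured during recording as the GPU texture.

- The high-resolution CPU image becomes the default video stream in the MP4 recording.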

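The camera config selected during recording determines the CPU image and the primary video stream in the recording. If you don't select a camera config with a high-resolution CPU image, the 640x480 (VGA) video is the first track in the file and plays by default, regardless of which video player you use.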
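The rules above can be sketched as a small selection routine. This is a hypothetical plain-Java illustration, not ARCore code: `CpuImagePicker`, `ImageSize`, and the pixel budget parameter are assumptions introduced here to express the trade-off between resolution and the cost of a second CPU stream. In an ARCore Java app, the candidate sizes would come from the CPU image size of each supported camera config (for example `CameraConfig.getImageSize()` on the results of `Session.getSupportedCameraConfigs`).

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical sketch of choosing a CPU image size and what it implies for the recording. */
public class CpuImagePicker {

    /** Hypothetical stand-in for the CPU image size reported by a camera config. */
    public static final class ImageSize {
        public final int width;
        public final int height;

        public ImageSize(int width, int height) {
            this.width = width;
            this.height = height;
        }

        public long pixels() {
            return (long) width * height;
        }
    }

    /** The 640x480 CPU image that ARCore records by default. */
    public static final ImageSize VGA = new ImageSize(640, 480);

    /** Returns the largest supported size above VGA that fits the pixel budget, or VGA otherwise. */
    public static ImageSize pick(List<ImageSize> supported, long maxPixels) {
        ImageSize best = VGA;
        for (ImageSize s : supported) {
            if (s.pixels() <= maxPixels && s.pixels() > best.pixels()) {
                best = s;
            }
        }
        return best;
    }

    /** A high-resolution config adds a second CPU stream next to the VGA tracking stream. */
    public static int cpuStreamCount(ImageSize selected) {
        return selected.pixels() > VGA.pixels() ? 2 : 1;
    }

    /** The first (default) video track is the high-resolution image if one was selected. */
    public static ImageSize primaryTrackSize(ImageSize selected) {
        return selected.pixels() > VGA.pixels() ? selected : VGA;
    }

    public static void main(String[] args) {
        List<ImageSize> supported = Arrays.asList(
                new ImageSize(640, 480), new ImageSize(1280, 720), new ImageSize(1920, 1080));
        ImageSize picked = pick(supported, 1280L * 720L);
        System.out.println(picked.width + "x" + picked.height
                + ", CPU streams: " + cpuStreamCount(picked));
    }
}
```

Capping the choice with a pixel budget is one way to account for the performance cost of the second stream; the right budget depends on the device and should be measured rather than assumed.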

Camera depth map visualization

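This is a video track representing the camera's depth map, recorded from the device's hardware depth sensor, such as a time-of-flight (ToF) sensor, and converted to RGB channel values. Use this video for preview purposes only.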

API call events

ARCore records measurements from the device's gyroscope and accelerometer sensors. It also records other data, some of which may be sensitive:

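- Dataset format version

- ARCore SDK version

- Google Play Services for AR version

- Device fingerprint (the output of adb shell getprop ro.build.fingerprint)

- Additional information about the sensors used for AR tracking

- When using the ARCore Geospatial API, the device's estimated location, magnetometer readings, and compass readings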

Last updated 2025-07-26 UTC.
