Learn how to use Augmented Faces to render assets on top of human faces in your own app.

Prerequisites

Before proceeding, make sure you understand fundamental AR concepts and how to configure an ARCore session.

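Detect faces

Faces are represented by ARFace objects, which ARFaceManager creates, updates, and removes. Once per frame, ARFaceManager invokes a facesChanged event that contains three lists: faces that have been added, faces that have been updated, and faces that have been removed since the last frame. When ARFaceManager detects a face in the scene, it instantiates a prefab with an ARFace component attached to track that face. The prefab can be left null.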
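The three lists are enough to keep an up-to-date view of every tracked face. The Python sketch below models that bookkeeping outside Unity; `FacesChangedArgs`, `FaceTracker`, and `on_faces_changed` are hypothetical names, not AR Foundation APIs, and a face is reduced to an integer ID plus its center position.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class FacesChangedArgs:
    """Hypothetical stand-in for one frame's change set."""
    added: List[Tuple[int, Vec3]] = field(default_factory=list)    # (face ID, center position)
    updated: List[Tuple[int, Vec3]] = field(default_factory=list)  # (face ID, center position)
    removed: List[int] = field(default_factory=list)               # face IDs


class FaceTracker:
    """Keeps a table of currently tracked faces, keyed by face ID."""

    def __init__(self) -> None:
        self.faces: Dict[int, Vec3] = {}

    def on_faces_changed(self, args: FacesChangedArgs) -> None:
        # Newly detected faces: start tracking them.
        for face_id, center in args.added:
            self.faces[face_id] = center
        # Faces that are still tracked: store their latest center position.
        for face_id, center in args.updated:
            if face_id in self.faces:
                self.faces[face_id] = center
        # Faces that were lost: stop tracking them.
        for face_id in args.removed:
            self.faces.pop(face_id, None)


# Usage: one face appears, moves, and is lost over three frames.
tracker = FaceTracker()
tracker.on_faces_changed(FacesChangedArgs(added=[(1, (0.0, 0.0, 0.5))]))
tracker.on_faces_changed(FacesChangedArgs(updated=[(1, (0.0, 0.1, 0.5))]))
tracker.on_faces_changed(FacesChangedArgs(removed=[1]))
print(tracker.faces)  # {}
```

In Unity you would subscribe a C# handler to facesChanged instead; the sketch only shows how the three lists combine.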

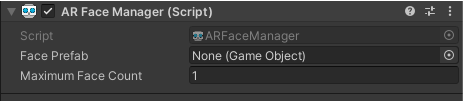

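To set up an ARFaceManager, create a new GameObject and add an ARFaceManager component to it.

Face Prefab is the prefab instantiated at the face's center pose. Maximum Face Count is the maximum number of faces that can be tracked.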

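Access the detected face

Access a detected face through the ARFace component attached to the face prefab. ARFace provides vertices, indices, vertex normals, and texture coordinates.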
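These arrays fit together the way a typical indexed triangle mesh does: vertices, normals, and texture coordinates are assumed to be parallel per-vertex arrays, and every three consecutive entries in the index array name one triangle. The Python sketch below illustrates that layout with plain lists; `validate_mesh` and `triangles` are hypothetical helpers, not part of ARFace.

```python
from typing import List, Sequence, Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]


def validate_mesh(vertices: Sequence[Vec3], normals: Sequence[Vec3],
                  uvs: Sequence[Vec2], indices: Sequence[int]) -> None:
    """Raise ValueError if the per-vertex arrays or the index array are inconsistent."""
    n = len(vertices)
    # Normals and texture coordinates are assumed to hold one entry per vertex.
    if len(normals) != n or len(uvs) != n:
        raise ValueError("normals and uvs must have one entry per vertex")
    # Every triangle consumes exactly three indices.
    if len(indices) % 3 != 0:
        raise ValueError("index count must be a multiple of 3")
    # Every index must point at an existing vertex.
    if any(i < 0 or i >= n for i in indices):
        raise ValueError("index out of range")


def triangles(vertices: Sequence[Vec3], indices: Sequence[int]) -> List[Tuple[Vec3, Vec3, Vec3]]:
    """Group the flat index array into triangles of vertex positions."""
    return [
        (vertices[indices[i]], vertices[indices[i + 1]], vertices[indices[i + 2]])
        for i in range(0, len(indices), 3)
    ]


# Usage: a unit square made of two triangles.
square = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
square_normals = [(0.0, 0.0, -1.0)] * 4
square_uvs = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
square_indices = [0, 1, 2, 0, 2, 3]
validate_mesh(square, square_normals, square_uvs, square_indices)
print(len(triangles(square, square_indices)))  # 2
```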

Parts of a detected face

The Augmented Faces API provides a center pose, three region poses, and a 3D face mesh.

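Center pose

The center pose, which marks the center of the user's head, is the origin of the prefab instantiated by ARFaceManager. It is located inside the skull, behind the nose.

The axes of the center pose are as follows: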

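- The positive X-axis (X+) points toward the left ear.

- The positive Y-axis (Y+) points upward.

- The positive Z-axis (Z+) points into the center of the head.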
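The axes matter when you place content relative to the face: a point expressed on the center-pose axes must be rotated by the face's orientation and then offset by its position to land in world space. In Unity, parenting content to the Face Prefab lets the Transform hierarchy do this for you; the Python sketch below spells out the underlying math. It assumes a pose is a position plus a unit quaternion stored as (x, y, z, w), and `rotate` and `local_to_world` are hypothetical helper names.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (x, y, z, w), assumed unit length


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q: v' = v + w*t + (q_xyz x t), where t = 2 * (q_xyz x v)."""
    q_xyz, w = (q[0], q[1], q[2]), q[3]
    t = tuple(2.0 * c for c in cross(q_xyz, v))
    u = cross(q_xyz, t)
    return (v[0] + w * t[0] + u[0],
            v[1] + w * t[1] + u[1],
            v[2] + w * t[2] + u[2])


def local_to_world(position: Vec3, rotation: Quat, local: Vec3) -> Vec3:
    """Map a point given on the center-pose axes (X+ left ear, Y+ up, Z+ into head) to world space."""
    r = rotate(rotation, local)
    return (position[0] + r[0], position[1] + r[1], position[2] + r[2])


# Usage: a head 0.5 m in front of the origin, turned 180 degrees about Y to face it.
half_angle = math.radians(180.0) / 2.0
head_rotation = (0.0, math.sin(half_angle), 0.0, math.cos(half_angle))
above_head = local_to_world((0.0, 0.0, 0.5), head_rotation, (0.0, 0.1, 0.0))  # 10 cm along Y+
into_head = local_to_world((0.0, 0.0, 0.5), head_rotation, (0.0, 0.0, 0.1))   # 10 cm along Z+
```

With the head turned toward the origin, moving along its Z+ axis (into the head) brings the point closer to the origin, to roughly (0, 0, 0.4).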

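Region poses

Located on the left forehead, the right forehead, and the tip of the nose, region poses mark important parts of the user's face. Region poses follow the same axis orientation as the center pose.

To use region poses, downcast the ARFaceManager's subsystem to ARCoreFaceSubsystem and call subsystem.GetRegionPoses() to obtain pose information for each region. For an example of how to implement this, see the Unity samples on GitHub.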
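Once the per-region data is available, a common next step is to look regions up by type and derive measurements from them. The Python sketch below does not call the ARCore subsystem; it works on plain (region, position) pairs, and `FaceRegion`, `index_regions`, and `forehead_width` are hypothetical names. It shows, for example, how the two forehead poses could give a width for scaling a hat or headband. `math.dist` requires Python 3.8 or later.

```python
import math
from enum import Enum
from typing import Dict, Iterable, Tuple

Vec3 = Tuple[float, float, float]


class FaceRegion(Enum):
    """Hypothetical stand-in for the three regions named in the text."""
    NOSE_TIP = "nose tip"
    FOREHEAD_LEFT = "left forehead"
    FOREHEAD_RIGHT = "right forehead"


def index_regions(regions: Iterable[Tuple[FaceRegion, Vec3]]) -> Dict[FaceRegion, Vec3]:
    """Turn (region, position) pairs into a lookup table keyed by region."""
    return {region: position for region, position in regions}


def forehead_width(lookup: Dict[FaceRegion, Vec3]) -> float:
    """Straight-line distance between the left and right forehead region positions."""
    return math.dist(lookup[FaceRegion.FOREHEAD_LEFT], lookup[FaceRegion.FOREHEAD_RIGHT])


# Usage with made-up positions (meters) on the center-pose axes, not real tracking data.
lookup = index_regions([
    (FaceRegion.NOSE_TIP, (0.0, 0.0, -0.1)),
    (FaceRegion.FOREHEAD_LEFT, (0.05, 0.08, -0.05)),
    (FaceRegion.FOREHEAD_RIGHT, (-0.05, 0.08, -0.05)),
])
width = forehead_width(lookup)  # approximately 0.1
```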

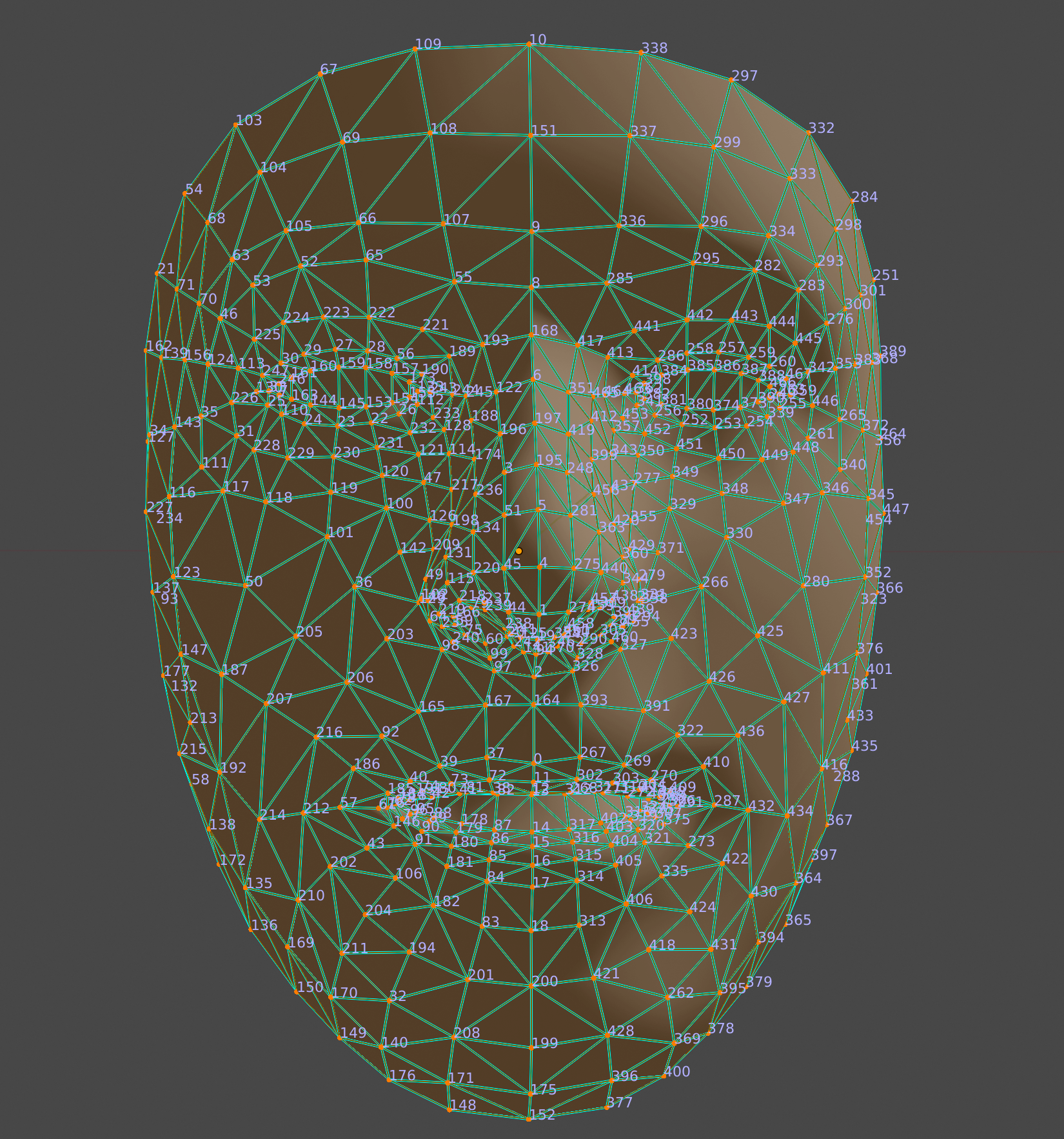

3D face mesh

The face mesh consists of 468 points that make up a human face. It is also defined relative to the center pose.
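Because the mesh is defined relative to the center pose, measurements taken directly on its vertices, such as the extent of the face, do not change as the head moves through the world. The Python sketch below computes an axis-aligned bounding box from a plain list of vertex positions; `local_bounds` and `size` are hypothetical helpers, and the vertices in the usage are placeholders, not real mesh data.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]

FACE_MESH_VERTEX_COUNT = 468  # vertex count stated for the face mesh


def local_bounds(vertices: Sequence[Vec3]) -> Tuple[Vec3, Vec3]:
    """Return the (min, max) corners of the mesh's axis-aligned box in center-pose coordinates."""
    if len(vertices) != FACE_MESH_VERTEX_COUNT:
        raise ValueError(f"expected {FACE_MESH_VERTEX_COUNT} vertices, got {len(vertices)}")
    xs, ys, zs = zip(*vertices)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def size(bounds: Tuple[Vec3, Vec3]) -> Vec3:
    """Width, height, and depth of a (min, max) box."""
    lo, hi = bounds
    return (hi[0] - lo[0], hi[1] - lo[1], hi[2] - lo[2])


# Usage with placeholder data: 466 points at the origin plus two extreme points.
placeholder = [(0.0, 0.0, 0.0)] * 466 + [(-0.07, -0.1, -0.12), (0.07, 0.09, 0.0)]
extent = size(local_bounds(placeholder))  # roughly (0.14, 0.19, 0.12)
```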

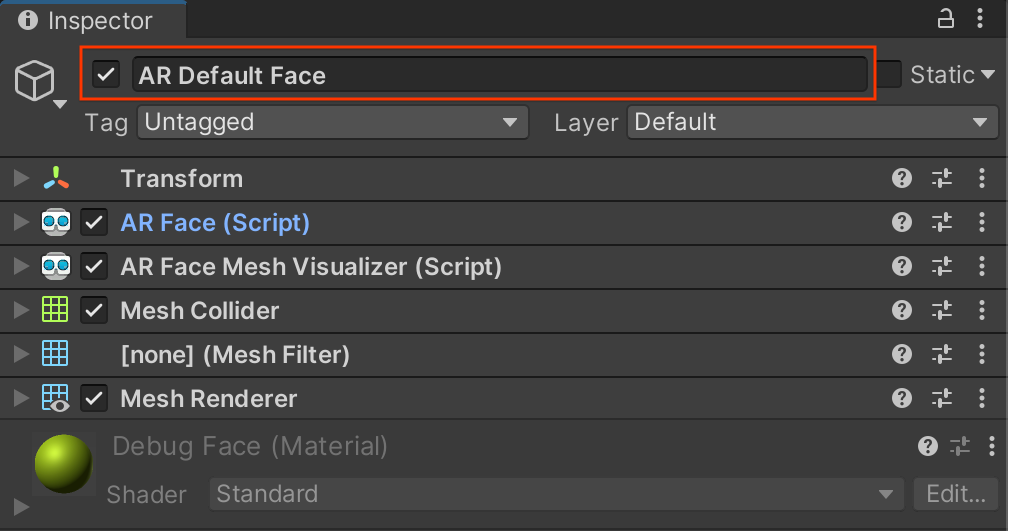

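To visualize the face mesh, attach an ARFaceMeshVisualizer to the Face Prefab. ARFaceMeshVisualizer generates a Mesh that corresponds to the detected face and assigns it as the mesh of the attached MeshFilter and MeshCollider. Use a MeshRenderer to set the Material used to render the face.

The AR Default Face Prefab renders a default material on the detected face mesh.

Follow these steps to start using the AR Default Face: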

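- Set up an ARFaceManager.

- In the Hierarchy tab, select + > XR > AR Default Face to create a new face object. This object is temporary and can be deleted once you have created the Face Prefab.

- Select the new AR Default Face to view it in the Inspector.

- Drag the newly created AR Default Face from the Hierarchy tab into the Project Assets window to create a prefab.

- In the ARFaceManager's Face Prefab field, set the newly created prefab as the Face Prefab.

- In the Hierarchy tab, delete the face object, since it is no longer needed.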

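Access individual vertices of the face mesh

Use face.vertices to access the positions of the face mesh's vertices. Use face.normals to access the corresponding vertex normals.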
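Because the two arrays are parallel (the normal at index i belongs to the vertex at index i), they can be combined per vertex. One use, sketched below in Python under that assumption, is to push geometry a few millimeters off the skin along each normal, a common trick to keep an overlay from z-fighting with the face mesh. `offset_along_normals` is a hypothetical helper, and the normals are assumed to be unit length.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def offset_along_normals(vertices: Sequence[Vec3], normals: Sequence[Vec3],
                         distance: float) -> List[Vec3]:
    """Move each vertex `distance` units along its normal (normals assumed unit length)."""
    if len(vertices) != len(normals):
        raise ValueError("vertices and normals must have the same length")
    return [
        (vx + nx * distance, vy + ny * distance, vz + nz * distance)
        for (vx, vy, vz), (nx, ny, nz) in zip(vertices, normals)
    ]


# Usage: lift two placeholder vertices 2 mm along their normals.
lifted = offset_along_normals(
    [(0.0, 0.0, -0.1), (0.03, 0.02, -0.08)],
    [(0.0, 0.0, -1.0), (0.6, 0.0, -0.8)],
    0.002,
)
```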

Visualize individual vertices of the face mesh

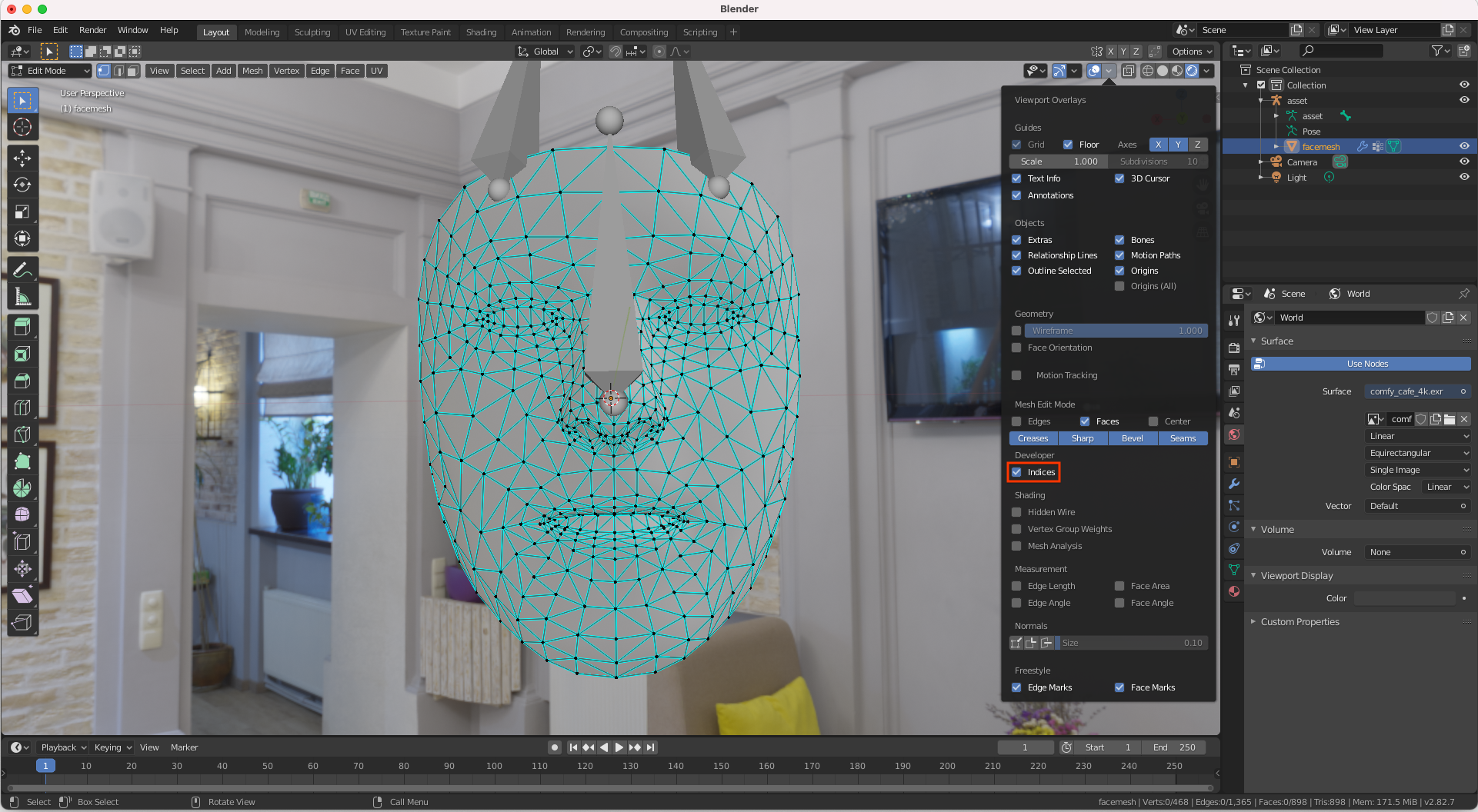

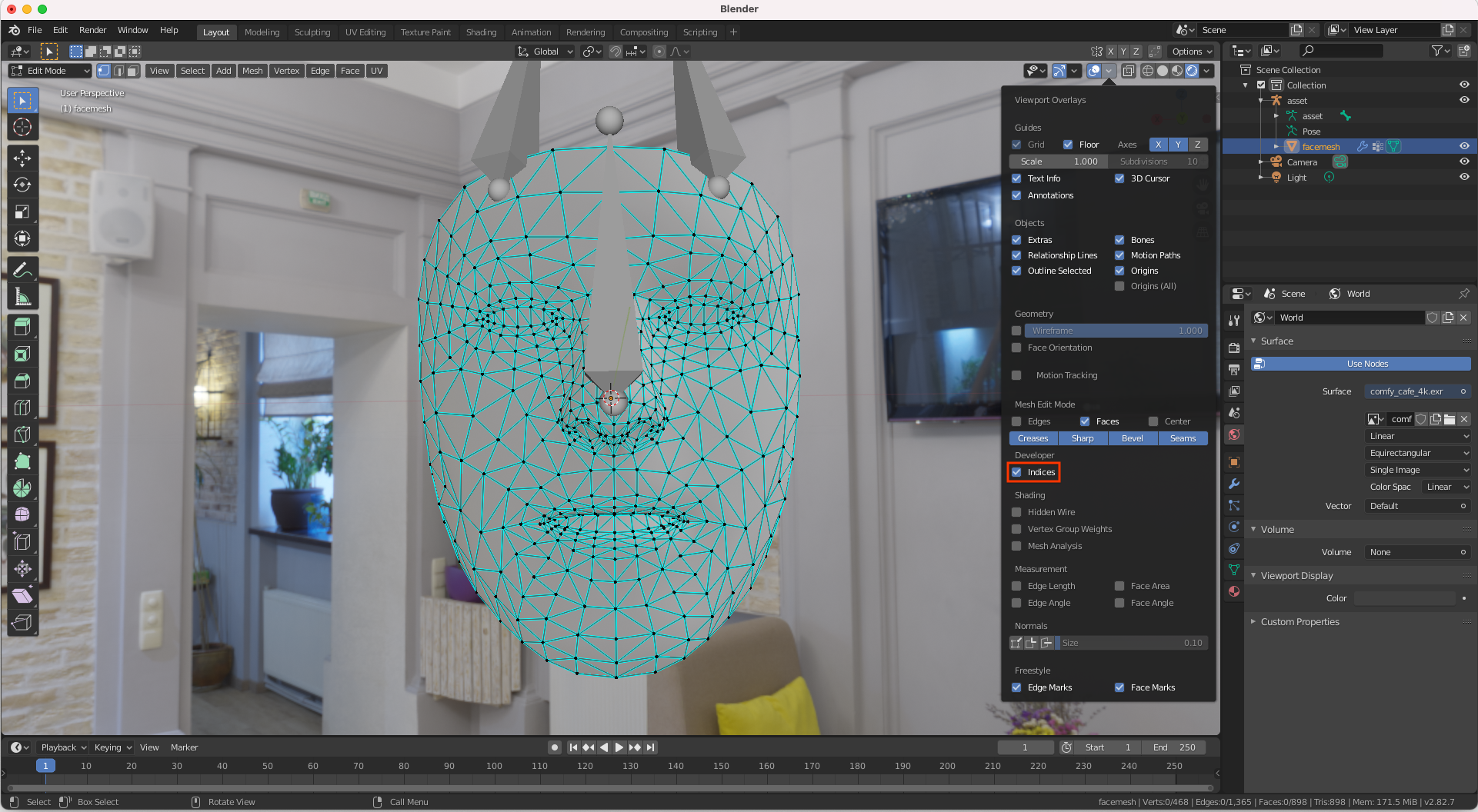

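You can use Blender to view the index numbers that correspond to the face mesh's vertices:

- Open Blender and import canonical_face_mesh.fbx from GitHub.

- Navigate to Edit > Preferences > Interface.

- Under the Display menu, select Developer Extras.

- Click the face in the 3D viewport to select it, then press Tab to enter Edit Mode.

- Open the drop-down menu next to the Overlays viewport and select Indices.

- Highlight the vertex whose index number you want to determine. To highlight all vertices, use Select > All.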
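Once you have an index from Blender, you can read that vertex from the face's vertex array each frame to follow a specific point on the face. The Python sketch below assumes, as the Blender steps do, that the indices shown for canonical_face_mesh.fbx match the runtime vertex order; `resolve_landmarks` and the `my_landmark` entry are hypothetical, and the vertex list is a stand-in for face.vertices.

```python
from typing import Dict, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def resolve_landmarks(vertices: Sequence[Vec3], picked: Dict[str, int]) -> Dict[str, Vec3]:
    """Map hand-picked vertex indices (e.g. read off in Blender) to their current positions."""
    resolved = {}
    for name, index in picked.items():
        if not 0 <= index < len(vertices):
            raise IndexError(f"{name}: index {index} is out of range for {len(vertices)} vertices")
        resolved[name] = vertices[index]
    return resolved


# Usage: the name and index are placeholders; substitute the index you read in Blender.
picked = {"my_landmark": 0}
current_vertices = [(0.0, -0.05, -0.09)] + [(0.0, 0.0, 0.0)] * 467  # stand-in for face.vertices
print(resolve_landmarks(current_vertices, picked))  # {'my_landmark': (0.0, -0.05, -0.09)}
```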