2008年1月24日星期四

发表者:Vanessa Fox

原文: Requesting removal of content from our index

发表于:2007年4月17日,星期二,下午4时04分

(译者注: 本文讲述了如何申请从谷歌的索引中删除内容,包括你自己拥有的内容及其他你所不拥有但是包含特殊信息的内容,如不健康内容或你的个人信息)

作为网站拥有者,网站的什么内容被搜索引擎索引,你完全可以控制。当你想让搜索引擎知道什么样的内容您不希望它们索引时,最简单的方法是使用 robots.txt文件或robots元标记 。但有时候,你想要删除已经被索引的内容。有什么最好的方法来做到这一点呢?

同以往一样,我们的回答总是这样开始:这取决于你想要删除的内容的类型。我们的网络管理员帮助中心提供了每种情况的 详细资料 。每当我们重新爬行该网页, 我们就会从我们的索引中自动移去你要删除的内容。但如果你想更快地删除你的内容,而不是等待下一次的爬行,我们刚刚有了一些方法使做到这一点变得更为容易。

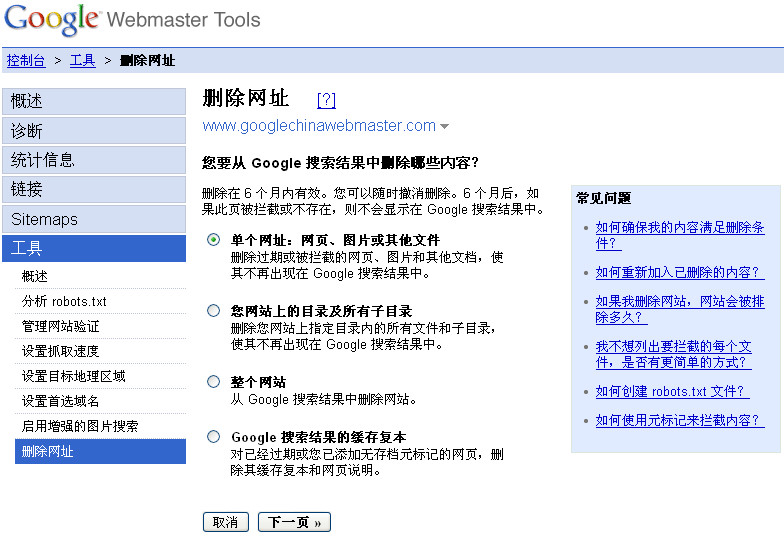

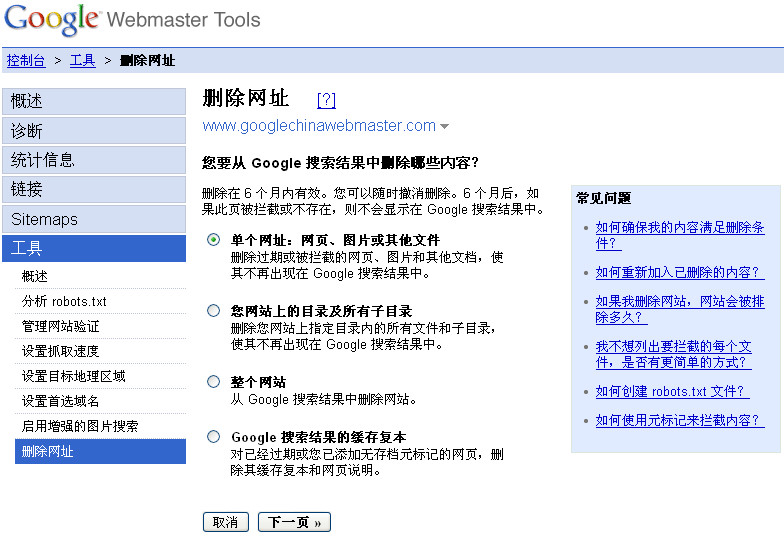

如果你的网站已经通过了 网站管理员工具 帐号的网站拥有者验证,你就会看到在"工具"下有一个删除网址链接。要想删除,你可以点击 删除网址 链接,然后再点击 新增删除请求 。请选择你想要的删除类型。

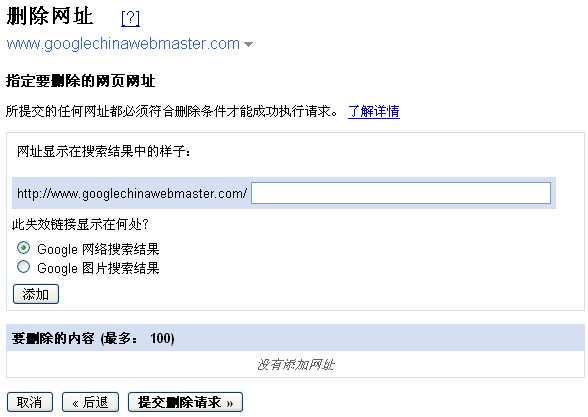

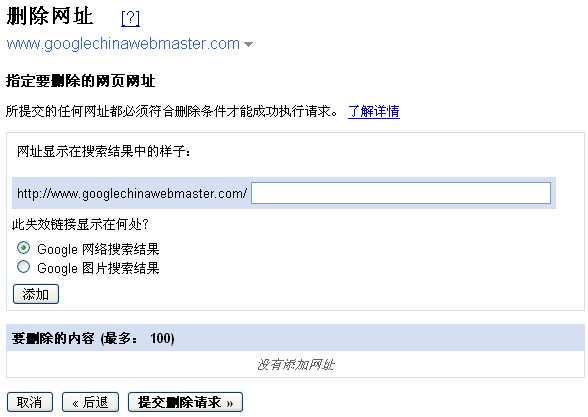

单个网址

如果你想移除一个URL或图像,请选择此项。一个可以删除的URL必须符合以下条件之一: 如果一个URL可以被删除了,你输入该URL,看看它是否出现在我们的网页搜索结果或图像搜索结果里。然后点击

添加

。您可以一次添加多达100个URL的请求。当你添加完所有你想删除的URL后,点击“

提交删除请求

”。

如果一个URL可以被删除了,你输入该URL,看看它是否出现在我们的网页搜索结果或图像搜索结果里。然后点击

添加

。您可以一次添加多达100个URL的请求。当你添加完所有你想删除的URL后,点击“

提交删除请求

”。

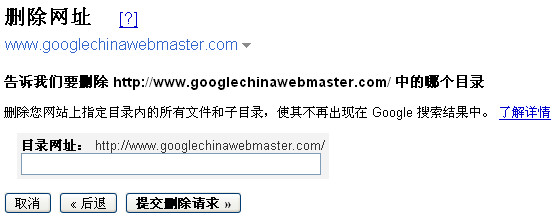

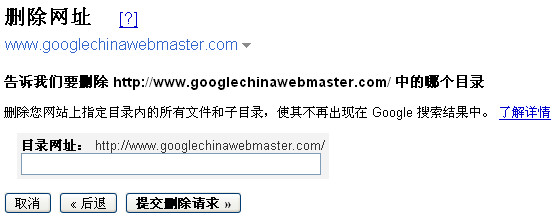

单个目录

如果你想删除你站点的一个目录下的所有文件和子目录,请选择此选项。例如,如果你请求删除以下内容:

https://www.example.com/myfolder

这将删除所有以该路径为开头的URL,譬如:

https://www.example.com/myfolder

https://www.example.com/myfolder/page1.html

https://www.example.com/myfolder/images/image.jpg

为了使目录可以被清除,你必须用robots.txt文件来阻截搜索引擎。例如,上面的例子中, https://www.example.com/robots.txt可以包括以下内容:

User-agent: Googlebot

Disallow: /myfolder

你的整个网站

只有当你想从Google索引删除你的整个网站时,才选 择此选项。此选项将删除所有子目录及文件。对于你网站的被索引的URL中你不喜欢的版本,请不要使用此选项来删除。举例来 说,如果你想你的全部URL只有www的版本才被索引,请不要使用这一选项来请求删除非www的版本。你可以使用 设置首选域名工具 来指定你希望被索引的版本(如果可能的话,做一个301重定向到你喜欢的版本)。使用此选项,你必须 使用robots.txt文件拦截或删除整个网站 。

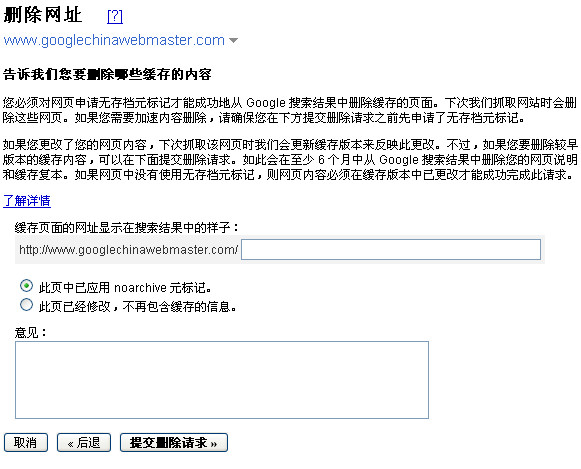

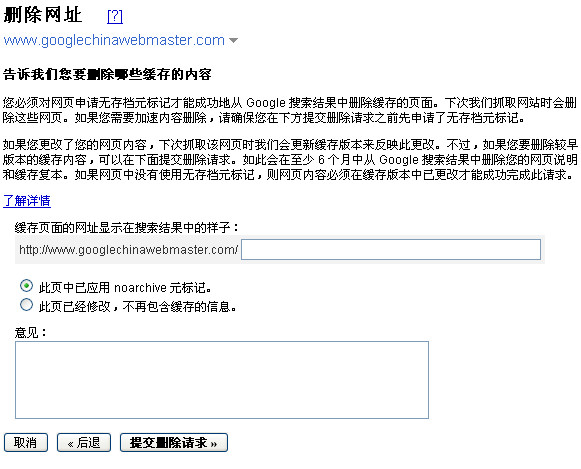

缓存副本

要删除你的网页在我们索引中的缓存副本(又称网页快照--译者注),请选择此项。你有两种方法来使你的页面符合删除页面缓存的条件。

使用noarchive元标记来要求快速删除

如果你根本不想让你的页面被缓存,你可以在该页面上加一个 noarchive元标记 ,然后再在工具中要求快速删除缓存副本。通过使用工具来要求删除缓存副本,我们会立刻执行。由于添加了noarchive元标记,我们将永远不会有该页的缓存版本。 (当然,如果你以后改变主意,你可以去掉noarchive元标记)。

改变网页内容

如果你的某一页面已被删除,你也不想让它的缓存版本存在于Google的索引中,你可以在工具里请求删除缓存。我们会先检查一下该页的现有内容是否真的有别于缓存版本。如果是,我们就会清除缓存版本。我们会在6个月后 自动显示最新的缓存页面版本(6个月后,我们可能已经又爬行过你的页面,缓存版本会反映最新的内容) ,或者,如果你发现我们早于6个月重新爬行了你的页面,你可以用工具要求我们早一点重新包含缓存版本。

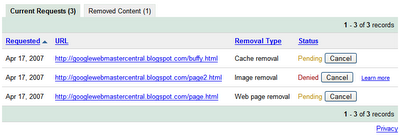

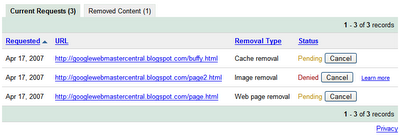

查阅删除请求状态

你的删除请求状态将是“等待中”,直到他们被处理。处理后的状态变化,要么是“被拒了”或者是“删除了”。一般来说,如果被拒绝,它一定是不满足被删除的条件。

请求内容的重新收录

如果请求是成功的,它就会出现在删掉的内容栏里。你可以随时重新收录你的网页,只要删掉robots.txt中的相关内容或相关页上的robots元标记,然后点击 Reinclude 。删除内容的有效期是六个月。六个月后,如果我们重新爬行网页时网页内容仍然是被阻截的或者返回一个404或410状态信息,它就不会被重新索引。不过,如果六个月后该页面可以被我们的抓取工具抓取,我们将再次把它列入我们的索引。

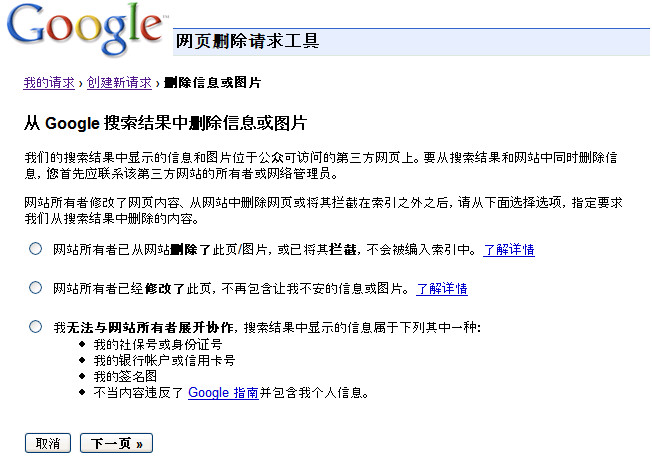

请求删除不是你拥有的内容

请求删除不是你拥有的内容

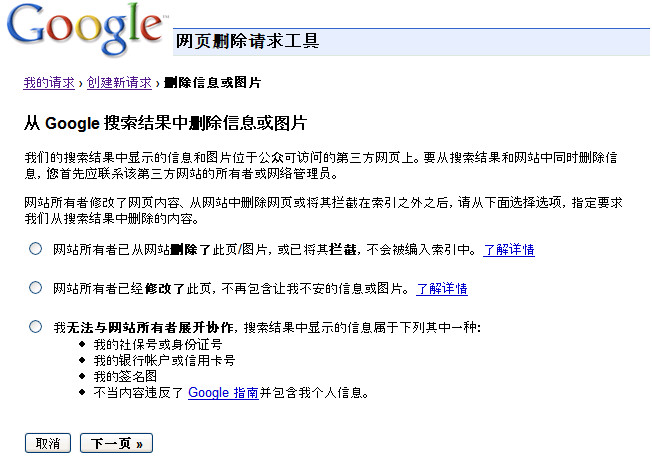

如果您想要求删除的内容在不属于你的网站上,怎么办呢?现在做到这一点更容易了。我们的新的 网页删除请求工具 逐步和你完成每个类型的删除过程。

因为Google仅仅索引网页,并不能控制网页的内容,我们通常不能从我们的索引中随便删除一些结果,除非网络管理员阻截Google、修改了内容或删除了页面。如果您想删除某些内容,你可以和网站所有者进行一下沟通,然后用此工具来加速从我们的搜索结果删除。

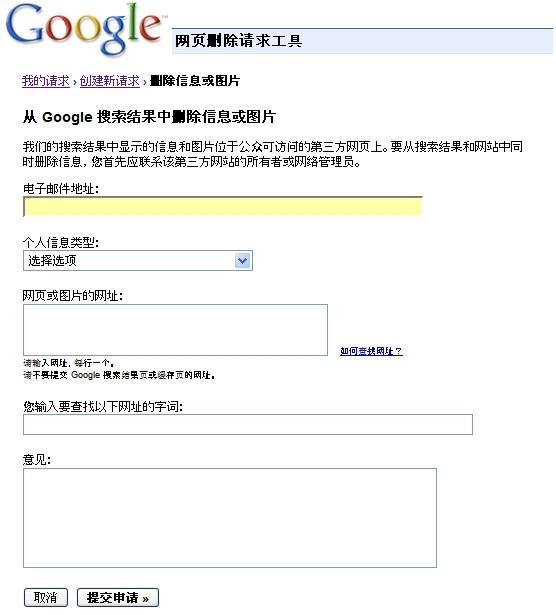

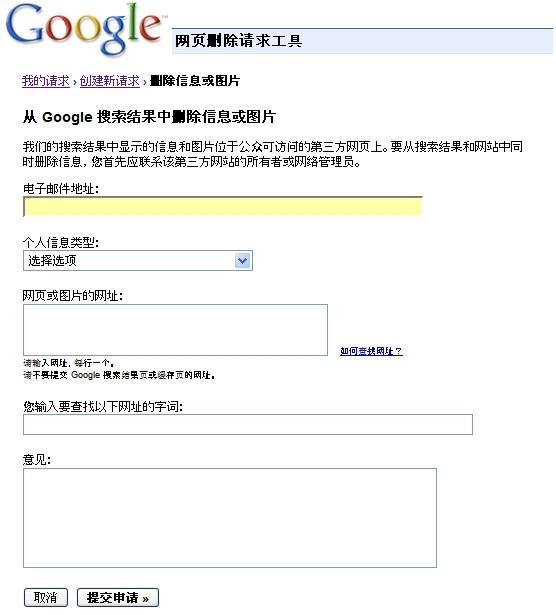

但是,如果您发现搜索结果中包含特定类型的个人信息,你可以请求删除,即使你不能和网站所有者沟通。对于这种类型的删除,请提供您的电子邮件地址,以便我们能够与您直接沟通。

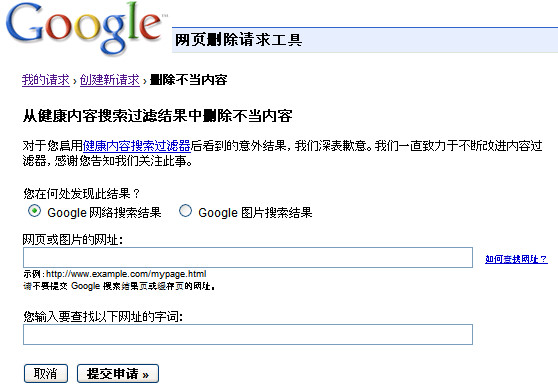

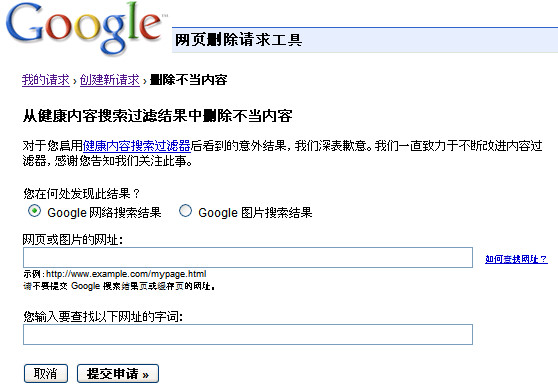

当启动健康内容搜索时,如果您发现一些搜索结果中有不健康的内容,你也可以使用工具通知我们。

你可以查阅“等候中”请求的状态。在当前网站管理员工具的版本中,一旦请求被处理,请求状态将会变成“已删除”或“拒绝”。一般来说,如果被拒 绝,它一定是不满足被删除的条件。对涉及个人信息的请求,您看不到任何状态,但是你会收到一封电子邮件来要求你为以后的步骤提供更多的资料。

老的URL删除工具中的请求会有什么样的结果呢?

如果您已经用老的URL删除工具提交了删除请求,你仍然可以登录来查阅这些请求的状态。但是,如果你有新的请求,请使用现在的新的和改进的工具版本。

原文: Requesting removal of content from our index

发表于:2007年4月17日,星期二,下午4时04分

(译者注: 本文讲述了如何申请从谷歌的索引中删除内容,包括你自己拥有的内容及其他你所不拥有但是包含特殊信息的内容,如不健康内容或你的个人信息)

作为网站拥有者,网站的什么内容被搜索引擎索引,你完全可以控制。当你想让搜索引擎知道什么样的内容您不希望它们索引时,最简单的方法是使用 robots.txt文件或robots元标记 。但有时候,你想要删除已经被索引的内容。有什么最好的方法来做到这一点呢?

同以往一样,我们的回答总是这样开始:这取决于你想要删除的内容的类型。我们的网络管理员帮助中心提供了每种情况的 详细资料 。每当我们重新爬行该网页, 我们就会从我们的索引中自动移去你要删除的内容。但如果你想更快地删除你的内容,而不是等待下一次的爬行,我们刚刚有了一些方法使做到这一点变得更为容易。

如果你的网站已经通过了 网站管理员工具 帐号的网站拥有者验证,你就会看到在"工具"下有一个删除网址链接。要想删除,你可以点击 删除网址 链接,然后再点击 新增删除请求 。请选择你想要的删除类型。

单个网址

如果你想移除一个URL或图像,请选择此项。一个可以删除的URL必须符合以下条件之一:

- 该URL必须返回一个 404 或 410 的状态码。

- 该URL必须被该网站的 robots.txt文件 所阻截。

- 该URL必须被 robots元标记 所阻截。

如果一个URL可以被删除了,你输入该URL,看看它是否出现在我们的网页搜索结果或图像搜索结果里。然后点击

添加

。您可以一次添加多达100个URL的请求。当你添加完所有你想删除的URL后,点击“

提交删除请求

”。

如果一个URL可以被删除了,你输入该URL,看看它是否出现在我们的网页搜索结果或图像搜索结果里。然后点击

添加

。您可以一次添加多达100个URL的请求。当你添加完所有你想删除的URL后,点击“

提交删除请求

”。

单个目录

如果你想删除你站点的一个目录下的所有文件和子目录,请选择此选项。例如,如果你请求删除以下内容:

https://www.example.com/myfolder

这将删除所有以该路径为开头的URL,譬如:

https://www.example.com/myfolder

https://www.example.com/myfolder/page1.html

https://www.example.com/myfolder/images/image.jpg

为了使目录可以被清除,你必须用robots.txt文件来阻截搜索引擎。例如,上面的例子中, https://www.example.com/robots.txt可以包括以下内容:

User-agent: Googlebot

Disallow: /myfolder

你的整个网站

只有当你想从Google索引删除你的整个网站时,才选 择此选项。此选项将删除所有子目录及文件。对于你网站的被索引的URL中你不喜欢的版本,请不要使用此选项来删除。举例来 说,如果你想你的全部URL只有www的版本才被索引,请不要使用这一选项来请求删除非www的版本。你可以使用 设置首选域名工具 来指定你希望被索引的版本(如果可能的话,做一个301重定向到你喜欢的版本)。使用此选项,你必须 使用robots.txt文件拦截或删除整个网站 。

缓存副本

要删除你的网页在我们索引中的缓存副本(又称网页快照--译者注),请选择此项。你有两种方法来使你的页面符合删除页面缓存的条件。

使用noarchive元标记来要求快速删除

如果你根本不想让你的页面被缓存,你可以在该页面上加一个 noarchive元标记 ,然后再在工具中要求快速删除缓存副本。通过使用工具来要求删除缓存副本,我们会立刻执行。由于添加了noarchive元标记,我们将永远不会有该页的缓存版本。 (当然,如果你以后改变主意,你可以去掉noarchive元标记)。

改变网页内容

如果你的某一页面已被删除,你也不想让它的缓存版本存在于Google的索引中,你可以在工具里请求删除缓存。我们会先检查一下该页的现有内容是否真的有别于缓存版本。如果是,我们就会清除缓存版本。我们会在6个月后 自动显示最新的缓存页面版本(6个月后,我们可能已经又爬行过你的页面,缓存版本会反映最新的内容) ,或者,如果你发现我们早于6个月重新爬行了你的页面,你可以用工具要求我们早一点重新包含缓存版本。

查阅删除请求状态

你的删除请求状态将是“等待中”,直到他们被处理。处理后的状态变化,要么是“被拒了”或者是“删除了”。一般来说,如果被拒绝,它一定是不满足被删除的条件。

请求内容的重新收录

如果请求是成功的,它就会出现在删掉的内容栏里。你可以随时重新收录你的网页,只要删掉robots.txt中的相关内容或相关页上的robots元标记,然后点击 Reinclude 。删除内容的有效期是六个月。六个月后,如果我们重新爬行网页时网页内容仍然是被阻截的或者返回一个404或410状态信息,它就不会被重新索引。不过,如果六个月后该页面可以被我们的抓取工具抓取,我们将再次把它列入我们的索引。

请求删除不是你拥有的内容

请求删除不是你拥有的内容

如果您想要求删除的内容在不属于你的网站上,怎么办呢?现在做到这一点更容易了。我们的新的 网页删除请求工具 逐步和你完成每个类型的删除过程。

因为Google仅仅索引网页,并不能控制网页的内容,我们通常不能从我们的索引中随便删除一些结果,除非网络管理员阻截Google、修改了内容或删除了页面。如果您想删除某些内容,你可以和网站所有者进行一下沟通,然后用此工具来加速从我们的搜索结果删除。

但是,如果您发现搜索结果中包含特定类型的个人信息,你可以请求删除,即使你不能和网站所有者沟通。对于这种类型的删除,请提供您的电子邮件地址,以便我们能够与您直接沟通。

当启动健康内容搜索时,如果您发现一些搜索结果中有不健康的内容,你也可以使用工具通知我们。

你可以查阅“等候中”请求的状态。在当前网站管理员工具的版本中,一旦请求被处理,请求状态将会变成“已删除”或“拒绝”。一般来说,如果被拒 绝,它一定是不满足被删除的条件。对涉及个人信息的请求,您看不到任何状态,但是你会收到一封电子邮件来要求你为以后的步骤提供更多的资料。

老的URL删除工具中的请求会有什么样的结果呢?

如果您已经用老的URL删除工具提交了删除请求,你仍然可以登录来查阅这些请求的状态。但是,如果你有新的请求,请使用现在的新的和改进的工具版本。